Scalability, performance and efficiency are the key considerations behind Rockset’s design and architecture. Today we’re delighted to share a notable milestone in one of these dimensions: one customer’s workload achieved 20,000 queries per second (QPS) with a p95 query latency of less than 200 ms while continuously ingesting streaming data, a significant demonstration of the scalability of our systems. This technical blog highlights the architecture that made this achievement possible.

Understanding real-time workloads

High QPS is often essential for organizations that need real-time or near real-time processing of a large volume of queries. Examples range from online marketplaces that must handle large numbers of customer queries and product searches to retail platforms that need high QPS to serve personalized recommendations in real time. In most of these real-time use cases, new data never stops arriving and queries never stop. A database that serves analytical queries in real time must process reads and writes concurrently. Serving such workloads at high QPS raises several challenges:

- Scalability: To handle a high volume of incoming queries, you must be able to distribute the workload across multiple nodes and scale out as needed.

- Workload isolation: When real-time data ingestion and query workloads run on the same compute units, they compete directly for resources. When data ingestion hits a sudden flood, your queries slow down or time out, making your application unstable. When you get a sudden, unexpected burst of queries, your data lags and your application is no longer real time.

- Query optimization: When the data size is large, you cannot afford to scan large portions of your data to answer queries, especially when QPS is also high. Queries should make heavy use of underlying indexes to reduce the amount of compute required per query.

- Concurrency: High query rates can lead to lock contention, causing performance bottlenecks or deadlocks. Effective concurrency control mechanisms are needed to maintain data consistency and avoid performance degradation.

- Data sharding and distribution: Efficiently sharding and distributing data across multiple nodes is essential for parallel processing and load balancing.

Let’s look at each of these points in more detail and discuss how the Rockset architecture addresses them.

How the Rockset architecture enables QPS scaling

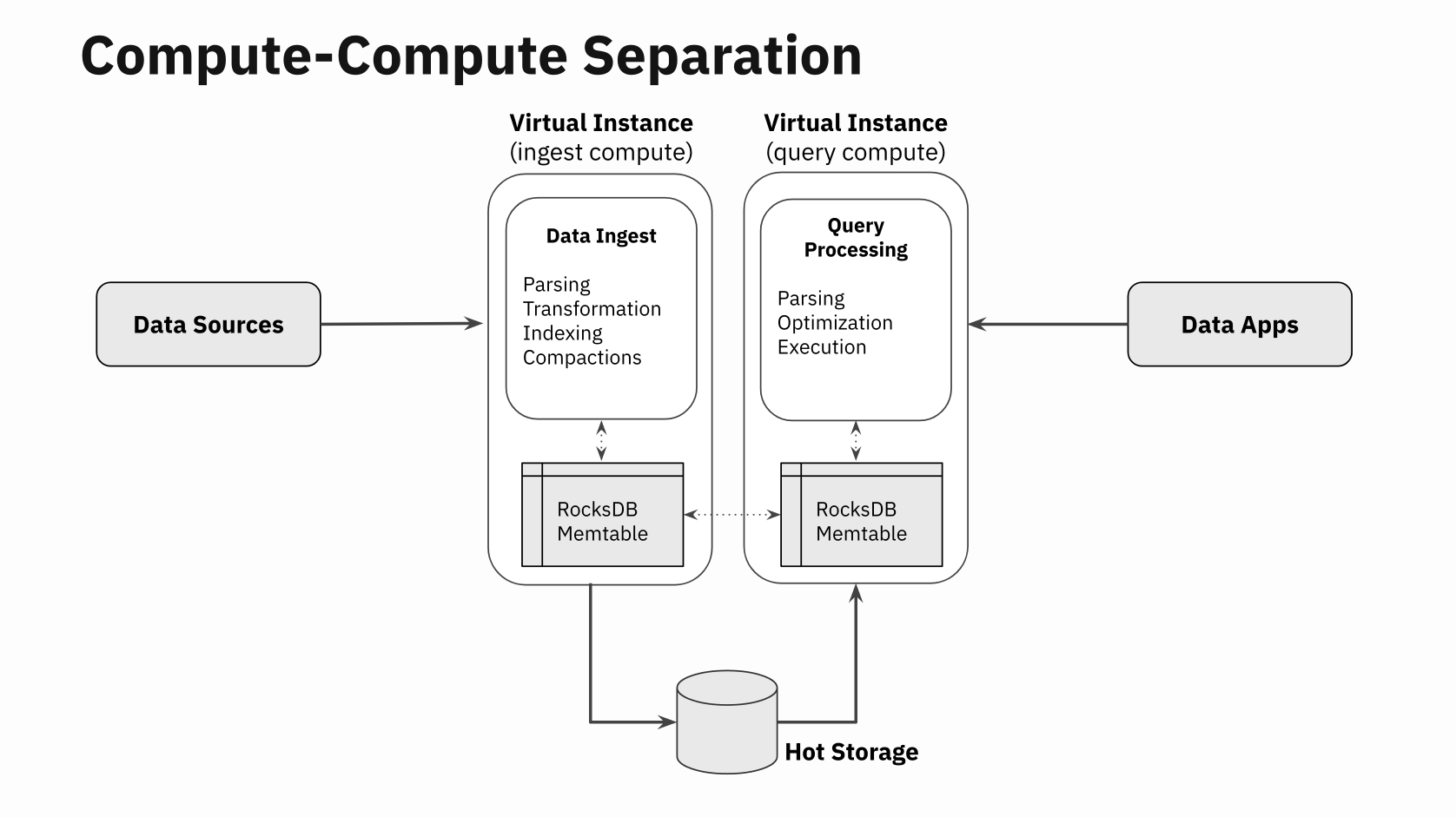

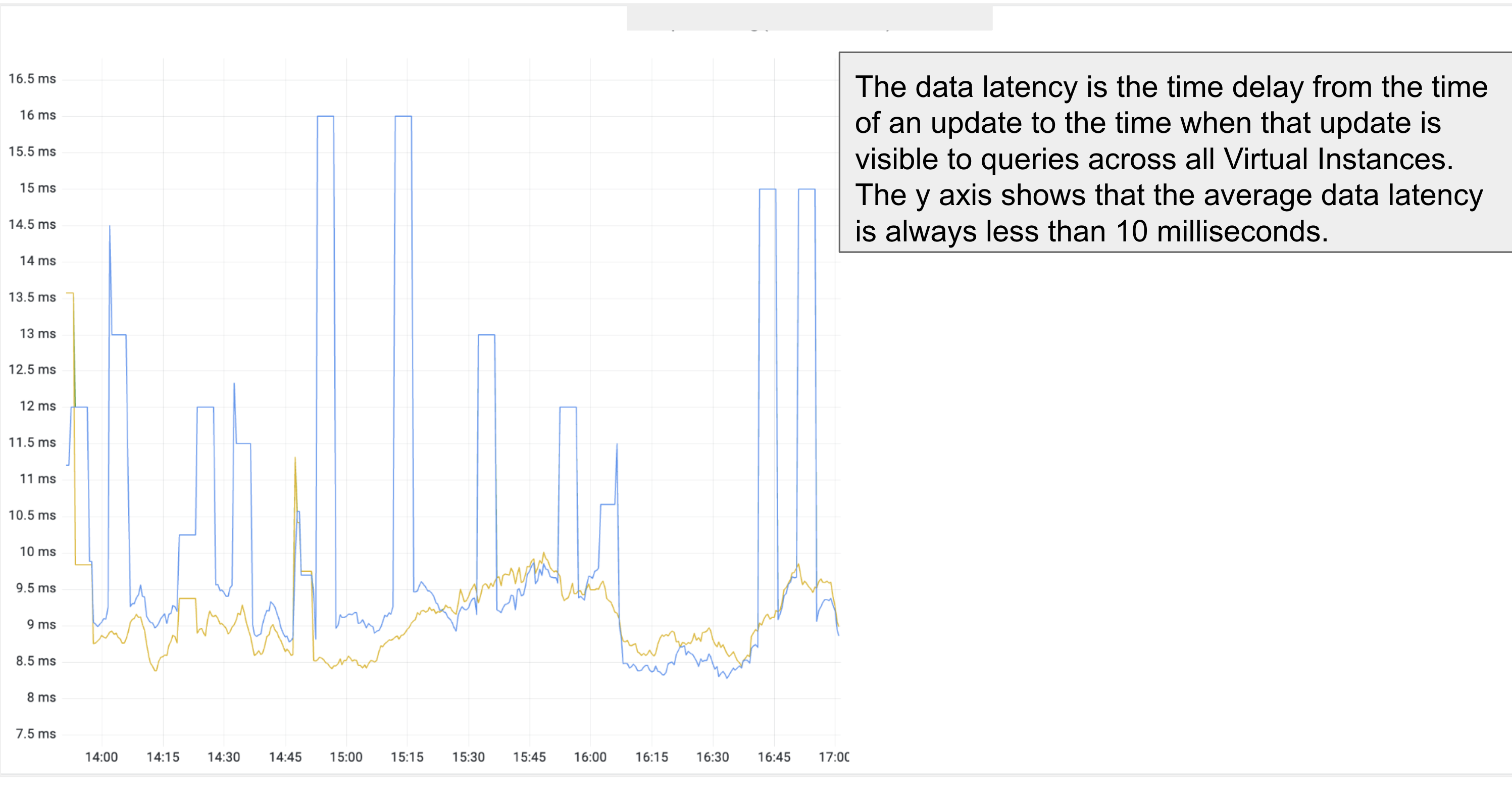

Scaling: Rockset separates compute from storage. A Rockset virtual instance (VI) is a group of compute and cache resources. It is completely separate from the hot storage tier, an SSD-based distributed storage system that stores the user’s dataset and serves requests for data blocks from the software running on the virtual instances. The fundamental requirement is that multiple virtual instances can update and read the same set of data residing in hot storage. A data update made from one virtual instance becomes visible in the other virtual instances within a few milliseconds.

Now you can see how easy it is to scale up or down. When query volume is low, simply use one virtual instance to serve queries. When query volume increases, spin up a new virtual instance and distribute the query load across all existing virtual instances. These virtual instances don’t need their own copy of the data; they all retrieve data from the hot storage tier. Because no data has to be replicated, scaling is quick and easy.
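To make the scale-out pattern concrete, here is a minimal Python sketch, assuming an in-memory dict in place of the SSD-based hot storage tier. `HotStorage`, `VirtualInstance` and `QueryRouter` are hypothetical names, not Rockset APIs, and round-robin routing is an assumed policy; the point is only that adding an instance adds compute without copying any data.

```python
import itertools
from typing import Optional


class HotStorage:
    """Stand-in for the shared storage tier that every virtual instance reads from."""

    def __init__(self) -> None:
        self._docs: dict = {}

    def put(self, doc_id: str, doc: dict) -> None:
        self._docs[doc_id] = doc

    def get(self, doc_id: str) -> Optional[dict]:
        return self._docs.get(doc_id)


class VirtualInstance:
    """Compute-only node: it keeps a handle to shared storage, not a copy of the data."""

    def __init__(self, name: str, storage: HotStorage) -> None:
        self.name = name
        self.storage = storage
        self.queries_served = 0

    def query(self, doc_id: str) -> Optional[dict]:
        self.queries_served += 1
        return self.storage.get(doc_id)


class QueryRouter:
    """Spreads incoming queries round-robin across the registered instances."""

    def __init__(self, instances: list) -> None:
        self.instances = list(instances)
        self._next = itertools.cycle(self.instances)

    def add_instance(self, vi: VirtualInstance) -> None:
        # Scaling out only registers more compute; no data is copied.
        self.instances.append(vi)
        self._next = itertools.cycle(self.instances)

    def query(self, doc_id: str) -> Optional[dict]:
        return next(self._next).query(doc_id)
```

For example, registering a second `VirtualInstance` against the same `HotStorage` object and issuing four queries leaves each instance with `queries_served == 2`, and both instances return the same document.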

Workload isolation: Each virtual instance in Rockset is fully isolated from every other virtual instance. You can have one virtual instance processing new writes and updating hot storage while a different virtual instance processes all queries. The advantage is that a burst of writes does not affect query latencies, which is one of the reasons p95 query latencies stay low. This design pattern is called compute-compute separation.
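The sketch below illustrates compute-compute separation in miniature, assuming two thread pools stand in for two virtual instances that share one store. `SharedStore`, `ingest_vi`, `query_vi` and the simulated 5 ms write cost are hypothetical. Because writes are only ever submitted to the ingest pool, a burst of updates queues up there while a query submitted to the query pool starts immediately. In a single Python process both pools still share one machine’s CPUs, so this shows scheduling isolation only, not the resource isolation of separate clusters.

```python
import threading
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import List, Optional


class SharedStore:
    """Shared storage tier; the lock only guards the dict, not the simulated work."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._docs: dict = {}

    def write(self, key: str, value: int) -> None:
        time.sleep(0.005)  # simulated cost of indexing one update
        with self._lock:
            self._docs[key] = value

    def read(self, key: str) -> Optional[int]:
        with self._lock:
            return self._docs.get(key)


store = SharedStore()
# Each executor stands in for one virtual instance's dedicated compute.
ingest_vi = ThreadPoolExecutor(max_workers=2, thread_name_prefix="ingest-vi")
query_vi = ThreadPoolExecutor(max_workers=2, thread_name_prefix="query-vi")


def write_burst(n: int) -> List[Future]:
    """Submit n updates; they occupy only the ingest pool's workers."""
    return [ingest_vi.submit(store.write, f"k{i}", i) for i in range(n)]


def timed_query(key: str) -> float:
    """Run one read on the query pool and return its wall-clock latency in seconds."""
    start = time.perf_counter()
    query_vi.submit(store.read, key).result()
    return time.perf_counter() - start
```

If both kinds of work shared one pool, a query submitted behind a large write burst would wait for the burst to drain; with separate pools it does not.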

Query optimization: Rockset uses a Converged Index to narrow each query so that it processes only the smallest portion of data it needs. This reduces the amount of compute required per query, thereby improving QPS. Rockset uses the open-source storage engine RocksDB to store and access the Converged Index.
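As a rough illustration of why indexing keeps per-query work small, here is a toy inverted index in Python. It is a simplification: the Converged Index is not implemented this way, and this sketch models only an inverted (search) index over top-level fields. `InvertedIndex` is a hypothetical name. A predicate such as `processBy = :processBy` or `array_contains(emails, :email)` becomes a single posting-list lookup instead of a scan over every document.

```python
from collections import defaultdict
from collections.abc import Hashable
from typing import Dict, Set, Tuple


class InvertedIndex:
    """Posting lists keyed by (field, value) -> ids of documents holding that value."""

    def __init__(self) -> None:
        self.postings: Dict[Tuple[str, object], Set[str]] = defaultdict(set)

    def add(self, doc_id: str, doc: dict) -> None:
        for field, value in doc.items():
            # Index each array element separately so membership becomes a lookup.
            values = value if isinstance(value, list) else [value]
            for v in values:
                if isinstance(v, Hashable):  # nested objects are skipped in this sketch
                    self.postings[(field, v)].add(doc_id)

    def lookup(self, field: str, value: object) -> Set[str]:
        # Reads one posting list instead of scanning every document.
        return set(self.postings.get((field, value), set()))
```

The cost of `lookup` depends on the number of matching documents, not on the size of the collection, which is what lets selective queries stay cheap as data grows.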

Concurrency: Rockset uses admission control to stay stable under heavy load, so that the system does not try to run too many things at once and end up doing all of them poorly. This is enforced through two settings: the Concurrent Query Execution Limit, which specifies the total number of queries that can be processed concurrently, and the Concurrent Query Limit, which determines how many queries that overflow the execution limit can be queued for execution.

This is especially important when QPS is in the thousands. If we processed all incoming queries at the same time, context switches and other overhead would make every query take longer. A better approach is to run only as many queries concurrently as needed to keep all CPUs fully utilized and to queue the remaining queries until CPU becomes available. Rockset’s Concurrent Query Execution Limit and Concurrent Query Limit settings let you tune these queues for your workload.
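The queuing behavior described above can be sketched as a small admission controller. This is a hypothetical model, not Rockset’s implementation: `max_running` plays the role of the execution limit, `max_queued` plays the role of the queue limit as described in this post, and rejecting queries beyond both limits is an assumption about what happens to further overflow.

```python
from collections import deque
from typing import Deque, Optional, Set


class AdmissionController:
    """Hypothetical two-limit admission control.

    max_running: queries allowed to execute at once (execution limit).
    max_queued:  overflow queries allowed to wait for a free slot (queue limit).
    """

    def __init__(self, max_running: int, max_queued: int) -> None:
        self.max_running = max_running
        self.max_queued = max_queued
        self.running: Set[str] = set()
        self.queue: Deque[str] = deque()

    def submit(self, query_id: str) -> str:
        if len(self.running) < self.max_running:
            self.running.add(query_id)
            return "running"
        if len(self.queue) < self.max_queued:
            self.queue.append(query_id)
            return "queued"
        return "rejected"  # shed load rather than oversubscribe the CPUs

    def finish(self, query_id: str) -> Optional[str]:
        """Mark a query done and promote the oldest queued query, if any."""
        self.running.discard(query_id)
        if self.queue and len(self.running) < self.max_running:
            next_id = self.queue.popleft()
            self.running.add(next_id)
            return next_id
        return None
```

With `max_running=2` and `max_queued=1`, four back-to-back submissions come back as running, running, queued, rejected; finishing one running query promotes the queued one in FIFO order.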

Data sharding: Rockset uses document sharding to distribute your data across multiple nodes in a virtual instance, so a single query can use the compute of all nodes in that virtual instance. This simplifies load balancing and data locality and improves query performance.
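Document sharding plus a scatter-gather query can be sketched as follows, assuming hash-based placement of documents by id. `shard_for` and `ShardedCollection` are hypothetical names, and SHA-1-based placement is an illustrative choice, not necessarily how Rockset assigns documents to shards. Each shard filters its own documents and the partial results are merged; in a real cluster, each shard’s work would run in parallel on a different node.

```python
import hashlib
from typing import Dict, List


def shard_for(doc_id: str, num_shards: int) -> int:
    """Map a document id to a shard with a hash that is stable across processes."""
    digest = hashlib.sha1(doc_id.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


class ShardedCollection:
    def __init__(self, num_shards: int) -> None:
        self.shards: List[Dict[str, dict]] = [{} for _ in range(num_shards)]

    def put(self, doc_id: str, doc: dict) -> None:
        self.shards[shard_for(doc_id, len(self.shards))][doc_id] = doc

    def select_where(self, field: str, value: object) -> List[dict]:
        # Scatter: each shard evaluates the predicate on its own documents
        # (sequentially here; on separate nodes in a real cluster).
        partials = [
            [doc for doc in shard.values() if doc.get(field) == value]
            for shard in self.shards
        ]
        # Gather: merge the partial results into one answer.
        return [doc for part in partials for doc in part]
```

A stable hash from `hashlib` is used because Python’s built-in `hash()` for strings is randomized per process, which would move documents to different shards between runs.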

A look at the customer workload

Data and queries: This customer’s dataset was 4.5 TB in size, with a total of 750 million rows. The average document size was ~9 KB, with mixed types and some deeply nested fields. The workload consists of two types of queries:

select * from collection_name where processBy = :processBy

select * from collection_name where array_contains(emails, :email)

The query predicates are parameterized, so each execution binds a different parameter value at query time.
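A load generator for this workload has to bind a fresh parameter value on every execution. Here is a minimal sketch of that step, assuming a 50/50 mix between the two query shapes and caller-supplied pools of candidate values; `next_request` and the request dictionary layout are hypothetical and are not the wire format of Rockset’s query API.

```python
import random
from typing import Dict, List

# The two query shapes from this workload; :processBy and :email are bound per execution.
QUERIES = {
    "by_process": "select * from collection_name where processBy = :processBy",
    "by_email": "select * from collection_name where array_contains(emails, :email)",
}


def next_request(rng: random.Random, process_ids: List[str], emails: List[str]) -> Dict:
    """Build one hypothetical query request with a freshly chosen parameter value."""
    if rng.random() < 0.5:  # assumed 50/50 mix between the two query shapes
        name, sql, value = "processBy", QUERIES["by_process"], rng.choice(process_ids)
    else:
        name, sql, value = "email", QUERIES["by_email"], rng.choice(emails)
    return {"query": sql, "parameters": [{"name": name, "type": "string", "value": value}]}
```

Drawing values at random keeps the load generator from repeating one predicate over and over, so the benchmark exercises many different index entries rather than one hot value.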

A Rockset virtual instance is a compute and cache cluster that comes in t-shirt sizes. In this case, the workload uses multiple 8XL virtual instances for queries and a single XL virtual instance to process concurrent updates. An 8XL has 256 vCPUs, while an XL has 32 vCPUs.

Here is a sample document. Note the deep levels of nesting in these documents. Unlike other OLAP databases, Rockset doesn’t require you to flatten these documents when you store them, and queries can access any field in a nested document without affecting QPS.
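To illustrate what querying nested fields without flattening means, here is a hypothetical document and two helpers in Python. The document is invented for illustration (it is not the customer’s data), `get_path` and `array_contains` are hypothetical helpers, and the `array_contains` behavior only approximates the SQL function used above; the real function’s handling of nulls and type mismatches may differ.

```python
from typing import Any


def get_path(doc: dict, path: str) -> Any:
    """Follow a dotted path such as 'customer.address.city'; None if any hop is missing."""
    node: Any = doc
    for key in path.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def array_contains(values: Any, needle: Any) -> bool:
    """True only when the field is an array that holds needle."""
    return isinstance(values, list) and needle in values


# Hypothetical nested document, stored as-is rather than flattened into columns.
doc = {
    "processBy": "svc-a",
    "emails": ["a@example.com", "b@example.com"],
    "customer": {"address": {"city": "Springfield", "geo": {"lat": 39.8, "lon": -89.6}}},
}
```

Here `get_path(doc, "customer.address.city")` returns `"Springfield"` and `array_contains(doc["emails"], "b@example.com")` is true, without any flattening step beforehand.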

Updates: A continuous stream of updates to existing records flows in at roughly 10 MB/s. This update stream is processed continuously by an XL virtual instance, and the updates become visible to all virtual instances in this configuration within a few milliseconds. A separate set of virtual instances processes the query load, as described below.

Demonstrating linear scaling of QPS with compute resources

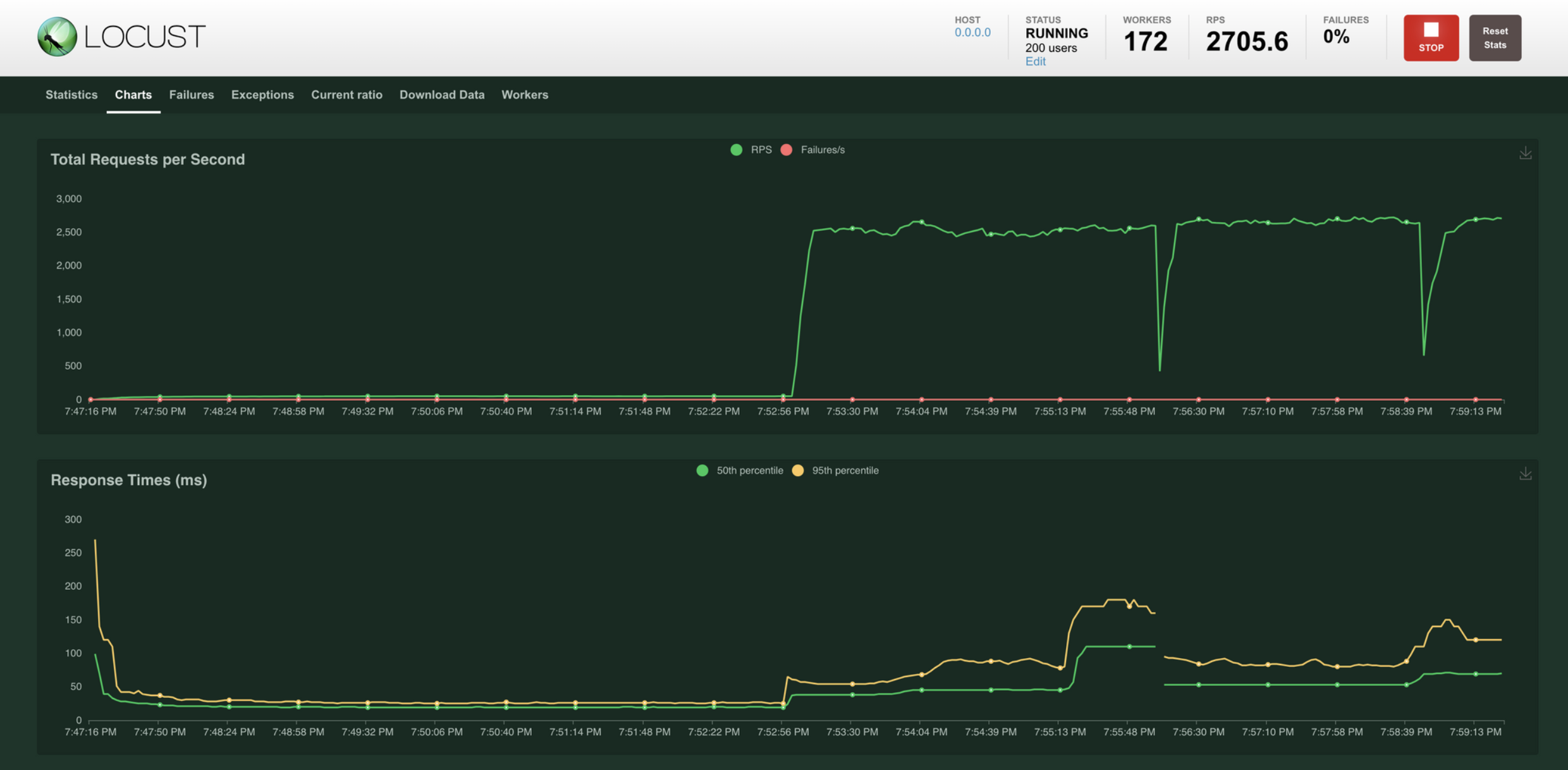

A distributed query generator based on Locust was used to generate up to 20,000 QPS against the customer dataset. Starting with a single 8XL virtual instance, we observed that it sustained around 2,700 QPS with a p95 query latency of less than 200 ms.

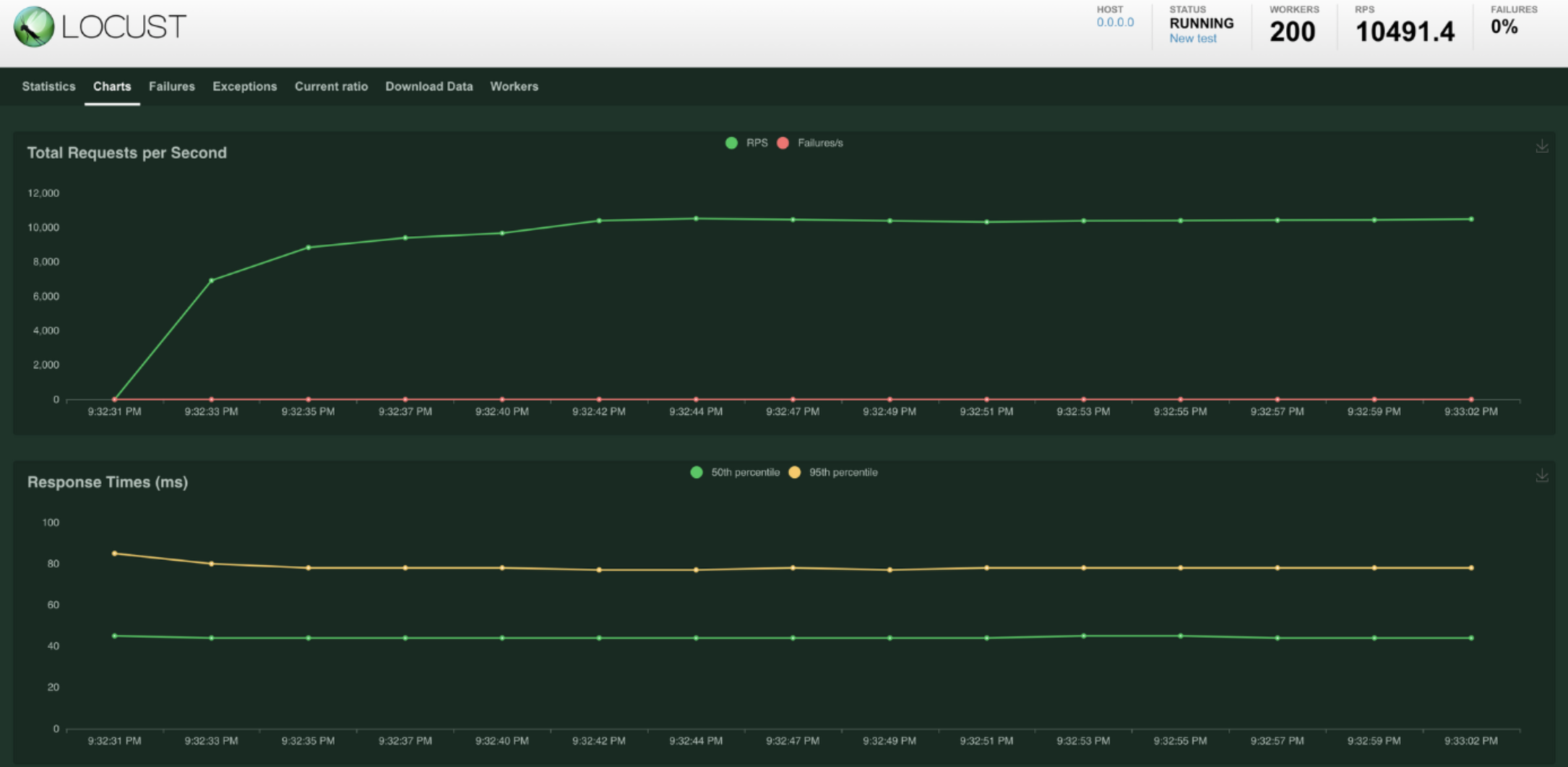

After scaling out to four 8XL virtual instances, we observed that the system sustained around 10,000 QPS with a p95 query latency of less than 200 ms.

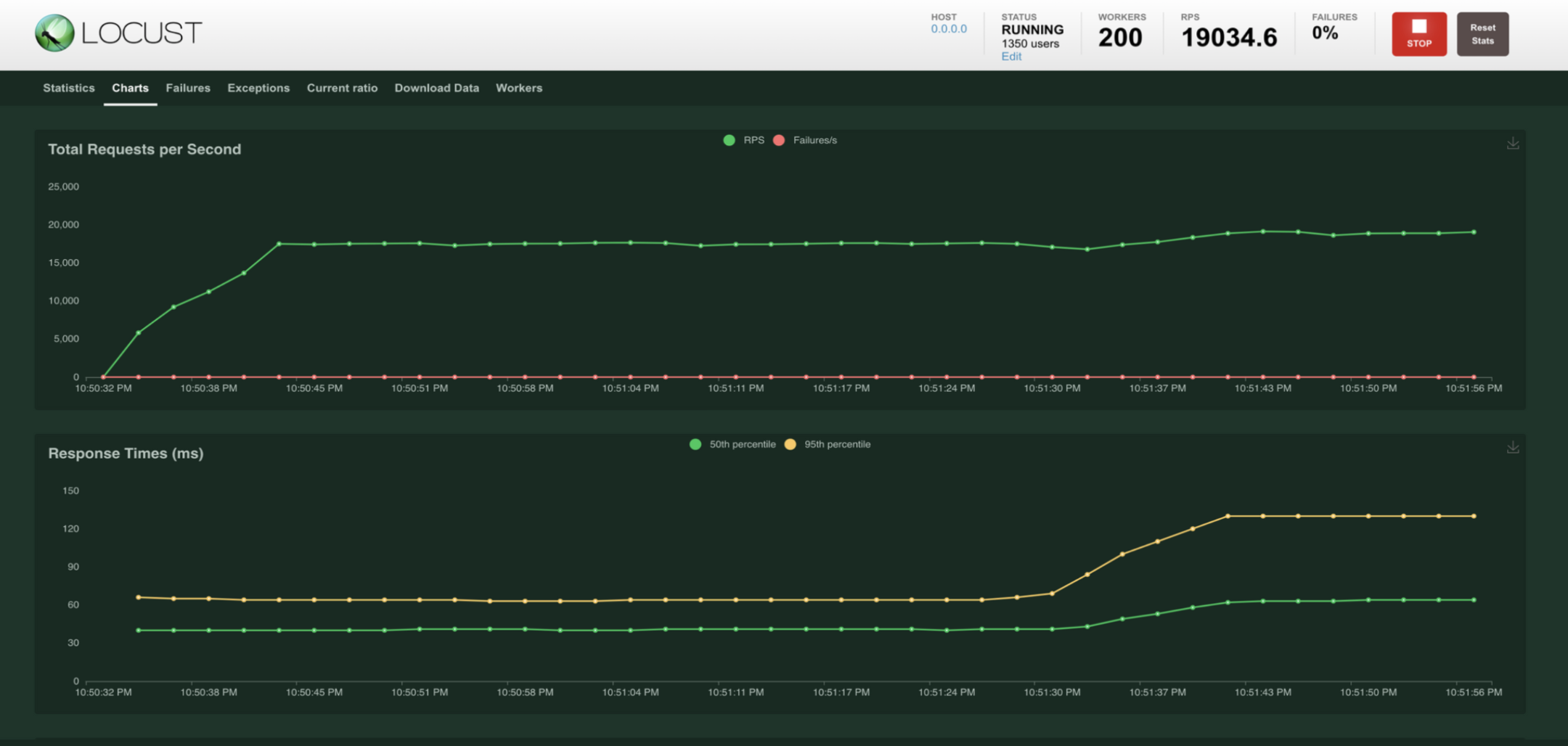

After scaling out to eight 8XL virtual instances, we observed that QPS continued to scale linearly, reaching around 19K QPS at under 200 ms p95.
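The reported figures can be turned into a quick efficiency check. This sketch compares each observed QPS figure with perfect linear scaling from the single-instance baseline and divides by the 256 vCPUs per 8XL; the inputs are the approximate figures quoted above, so the results are approximate too. `scaling_table` is a hypothetical helper name.

```python
from typing import List, Tuple

# Approximate figures reported above: (number of 8XL virtual instances, sustained QPS).
OBSERVED: List[Tuple[int, int]] = [(1, 2_700), (4, 10_000), (8, 19_000)]
VCPUS_PER_8XL = 256


def scaling_table(observed: List[Tuple[int, int]], vcpus_per_vi: int = VCPUS_PER_8XL):
    """Return (instances, qps, ideal_qps, efficiency, qps_per_vcpu) for each data point."""
    base_per_vi = observed[0][1] / observed[0][0]  # QPS of the single-instance baseline
    rows = []
    for n, qps in observed:
        ideal = base_per_vi * n  # perfect linear scaling from the baseline
        rows.append((n, qps, ideal, qps / ideal, qps / (n * vcpus_per_vi)))
    return rows


for n, qps, ideal, eff, per_vcpu in scaling_table(OBSERVED):
    print(f"{n} x 8XL: {qps:,} QPS vs ideal {ideal:,.0f} -> {eff:.0%}, {per_vcpu:.1f} QPS/vCPU")
```

On these numbers, four instances reach about 93% of ideal linear scaling and eight reach about 88%, at roughly 9.3 to 10.5 QPS per vCPU, which is what near-linear scaling means in practice here.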

Data updates

Data updates are applied on one virtual instance and queries run on eight different virtual instances. So the natural question is: “Are the updates visible on all virtual instances, and if so, how long does it take for them to show up in queries?”

The data freshness metric, also called data latency, is in the single-digit milliseconds across all virtual instances, as shown in the graph above. This is a real measure of Rockset’s real-time capability under heavy writes and high QPS!
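One way to observe the same property from outside the database is a write-then-poll probe. This is a hypothetical sketch, not how Rockset computes its data latency metric: `write` and `read` are caller-supplied callables (for example, an insert through the ingest instance and a lookup through a query instance), and the returned figure is an upper bound because it includes polling granularity and request round trips.

```python
import time
from typing import Callable, Optional


def measure_visibility_lag(
    write: Callable[[str], None],
    read: Callable[[str], bool],
    marker: str,
    timeout_s: float = 5.0,
    poll_interval_s: float = 0.001,
) -> Optional[float]:
    """Write a marker through one instance and poll another until it is visible.

    Returns the observed lag in seconds, or None if the marker never appeared
    before the timeout.
    """
    start = time.monotonic()
    write(marker)
    while time.monotonic() - start < timeout_s:
        if read(marker):
            return time.monotonic() - start
        time.sleep(poll_interval_s)
    return None
```

Running one probe per query instance and taking the maximum gives a rough external view of how long the slowest instance takes to see an update.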

Takeaways

The results show that Rockset can achieve near-linear QPS scaling: it is as easy as creating new virtual instances and distributing the query load across all of them, with no need to replicate data. At the same time, Rockset keeps processing updates. We’re excited about the possibilities ahead as we continue to push the boundaries of what’s possible with high QPS.