(Inray27/Shuttersock)

Even earlier than the generative AI arrived on the scene, firms fought to correctly guarantee their information, purposes and networks. Within the countless cat and mouse sport among the many good and unhealthy, the unhealthy guys win their battleship share. Nonetheless, the arrival of Genai brings new threats of cyber safety, and adapting to them is the one hope of survival.

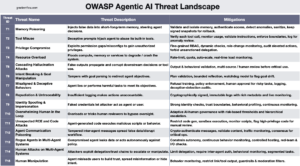

There may be all kinds of how by which AI and computerized studying work together with cybersecurity, a few of them good and different unhealthy. However when it comes to the brand new within the sport, there are three patterns that stand out and deserve specific consideration, together with the outline of fast injection and information poisoning.

Slopsquatting

“Slopsquatting” is a brand new model of “Typosquatting”, the place Ne’er-Do-Wells unfold malware to unsuspecting internet vacationers which are incorrect in a URL. With slopsquating, the unhealthy ones are spreading malware by way of software program growth libraries that Genai has been hallucinated.

We all know that enormous language fashions (LLM) are susceptible to hallucinations. The tendency to create issues with entire material shouldn’t be a lot a mistake of LLMS, however an intrinsic attribute to the best way LLM develops. A few of these conspirations are humorous, however others could also be severe. Slopsquatting falls within the final class.

In accordance with stories, giant firms have beneficial pyonic libraries which were hallucinated by Genai. In A current story in The registrationLanyado Bar, Safety Researcher at Loop securityHe defined that Alibaba beneficial that customers set up a false model of the official library referred to as “HuggingFace-Cli.”

Whereas it isn’t but clear if the evils have armed slopsquating but, Genai’s pattern to hallucinate software program libraries is completely clear. Final month, the researchers revealed an article that concluded that Genai recommends python and JavaScript libraries that don’t exist round a fifth of the time.

“Our findings reveal that the common proportion of hallucinated packages is at the very least 5.2% for industrial fashions and 21.7% for open supply fashions, together with the superb 205,474 distinctive examples of hallucinated packages names, additional subsidizing the severity and area of this risk,” the investigators wrote within the doc, titled “We now have a package deal for you! An exhaustive evaluation of package deal hallucinations by way of the code generated by LLM.”

Of the greater than 205.00 cases of packing of packages, the names appeared to be impressed by actual packages 38% of the time, the outcomes of 13% of the time have been and 51% of the time have been utterly manufactured.

Fast injection

Simply once you thought it was protected to enterprise on the internet, a brand new risk emerged: fast injection.

Just like the SQL injection assaults that affected the primary warriors of the Net 2.0 that didn’t correctly validate the database entry fields, the injections instantly suggest the surreptitious injection of a malicious warning in an software enabled for Genai to attain some goal, from the dissemination of data and the rights of execution of code.

Mitigating this kind of assault is troublesome because of the nature of Genai purposes. As an alternative of inspecting the code for malicious entities, organizations should examine the complete mannequin, together with all their weights. That’s not possible in most conditions, which forces them to undertake different methods, says information scientist Ben Lorica.

“An poisoned management level or an incredible/dedicated python package deal referred to as in a file of necessities generated by LLM may give an attacker code execution rights inside its pipe,” Lorica writes in a current supply of his of his Gradient move Info sheet. “Normal security scanners can not analyze multipabyte multi -weight recordsdata, so they’re further safeguards: digitally signing weights of the mannequin, sustaining a ‘materials bill’ for coaching information and sustaining verifiable coaching data.”

Hiddenlayer researchers not too long ago described a flip within the fast injection assault by Hiddenlayer, which calls their approach “coverage puppets.”

“By reformulating the indications in order that they appear to be one of many few forms of coverage recordsdata, reminiscent of XML, Ini or JSON, a LLM may be fooled in alignments or directions for subverting,” the researchers write In a abstract of his findings. “Consequently, attackers can simply keep away from system indications and any safety alignment skilled within the fashions.”

The corporate says that its method to the indications of the falsification coverage permits it to keep away from the alignment of the mannequin and produce outcomes which are in clear violation of the IA safety insurance policies, together with CBRN (chemical, organic, radiological and nuclear), huge violence, self -harm and fast escape of the system.

Knowledge poisoning

The info is within the coronary heart of computerized studying and AI fashions. So, if a malicious consumer can inject, eradicate or change the information that a company makes use of to coach an ML or AI mannequin, then it might doubtlessly sigh the educational course of and drive the ML or AI mannequin to generate an adversarial consequence.

A type of adversarial assaults, information poisoning or information manipulation poses a severe danger for organizations that depend upon AI. In accordance with the safety agency CrowdstrikeKnowledge poisoning is a danger for instances of well being use, finance, automotive and human assets, and may even be doubtlessly used to create rear doorways.

“As a result of many of the fashions of AI continually evolve, it may be troublesome to detect when the information set has compromised,” says the corporate in A 2024 weblog put up. “The adversaries usually make delicate, however highly effective adjustments, to the information that may be detected. That is very true if the adversary is privileged info and, subsequently, has detailed info on the safety measures and instruments of the group, in addition to its processes.”

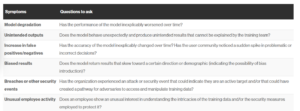

Knowledge poisoning may be directed or not. In any case, there are revealing indicators that safety professionals can search that point out whether or not their information has been dedicated.

Assaults as Social Engineering

These three vectors of AI Atacan (injection -injection and information poisoning, aren’t the one methods by which cybercriminals can assault organizations by way of AI. However there are 3 ways of which organizations that use the usage of AIs should bear in mind to frustrate the attainable dedication of their techniques.

Until organizations try to adapt to the brand new methods by which laptop pirates can compromise techniques by way of AI, run the danger of changing into a sufferer. As a result of the LLMs behave probabilistically as an alternative of figuring out, they’re much extra chargeable for the forms of social engineering assaults than conventional techniques, says Lorica.

“The result’s a harmful safety asymmetry: exploitation methods are quickly prolonged by way of open supply repositories and discord channels, whereas efficient mitigations require architectural evaluations, refined take a look at protocols and integral employees coaching,” writes Lorica. “The extra we deal with Llm as ‘simply one other API’, the louder that hole turns into.”

Associated articles:

The CSA report reveals AI’s potential to enhance offensive safety

His APIs are a security danger: how to make sure their information in an evolving digital panorama

Cloud Safety Alliance presents a complete danger administration framework for AI fashions