Faster, smarter, and more responsive AI applications: that is what your users expect. But when large language models (LLMs) are slow to respond, the user experience suffers. Every millisecond counts.

With Cerebras’ high-speed inference endpoints, you can reduce latency, speed up model responses, and maintain quality at scale with models like Llama 3.1-70B. By following a few simple steps, you can customize and deploy your own LLMs, giving you the control to optimize for both speed and quality.

In this blog, we’ll explain how to:

- Set up Llama 3.1-70B in the DataRobot LLM Playground.

- Generate and apply an API key to leverage Cerebras for inference.

- Customize and deploy smarter, faster applications.

By the end, you will be able to deploy LLMs that deliver speed, accuracy, and real-time responsiveness.

Prototype, customize, and test LLMs in one place

Prototyping and testing generative AI models often requires a patchwork of disconnected tools. But with a unified, integrated environment for LLMs, retrieval techniques, and evaluation metrics, you can go from an idea to a working prototype faster and with fewer obstacles.

This simplified process means you can focus on building effective, high-impact AI applications without the hassle of piecing together tools from different platforms.

Let’s look at a use case to see how you can leverage these capabilities to develop smarter, faster AI applications.

Use case: Speed up LLM inference without sacrificing quality

Low latency is essential for building fast, responsive AI applications. But accelerated responses don’t have to come at the expense of quality.

The speed of Cerebras Inference outperforms other platforms, allowing developers to build applications that feel fluid, responsive, and intelligent.

When combined with an intuitive development experience, you can:

- Reduce LLM latency for faster user interactions.

- Experiment more efficiently with new models and workflows.

- Deploy applications that respond instantly to user actions.

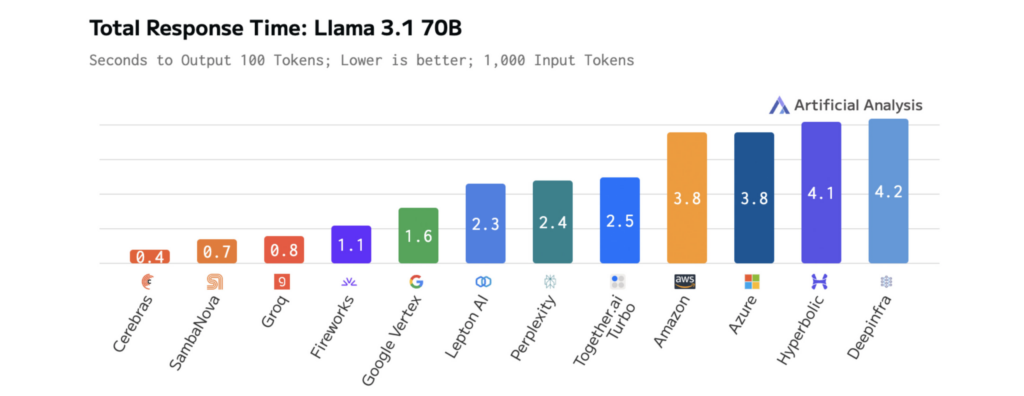

The following diagrams show the performance of Cerebras on Llama 3.1-70B, illustrating faster response times and lower latency than other platforms. This allows for rapid iteration during development and real-time performance in production.

How model size affects LLM speed and performance

As LLMs grow larger and more complex, their outputs become more relevant and comprehensive, but this comes at a cost: increased latency. Cerebras addresses this challenge with streamlined computations, optimized data transfer, and intelligent decoding designed for speed.

These speed improvements are already transforming AI applications in industries such as pharmaceuticals and voice AI. For example:

- GlaxoSmithKline (GSK) uses Cerebras Inference to accelerate drug discovery, driving higher productivity.

- LiveKit has boosted the performance of ChatGPT’s voice mode pipeline, achieving faster response times than traditional inference solutions.

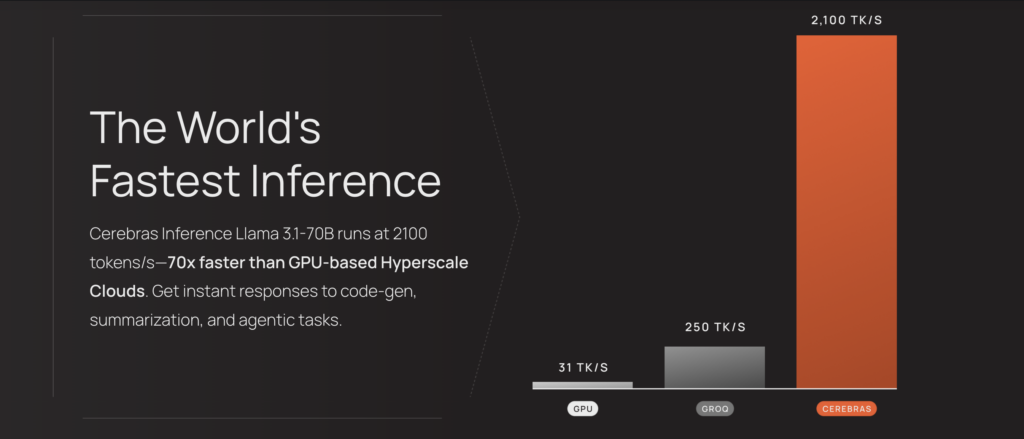

The results are measurable. On Llama 3.1-70B, Cerebras delivers 70x faster inference than entry-level GPUs, enabling smoother real-time interactions and faster experimentation cycles.

This performance is powered by Cerebras’ third-generation Wafer-Scale Engine (WSE-3), a custom processor designed to optimize the tensor-based, sparse linear algebra operations that drive LLM inference.

By prioritizing performance, efficiency, and flexibility, the WSE-3 delivers faster and more consistent model performance.

The speed of Cerebras Inference reduces the latency of AI applications powered by its models, enabling deeper reasoning and more responsive user experiences. Accessing these optimized models is simple: they’re hosted on Cerebras and can be reached through a single endpoint, so you can start using them with minimal configuration.
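To make the "single endpoint" idea concrete, here is a minimal Python sketch of what such a call might look like using only the standard library. The endpoint URL, the model identifier, and the OpenAI-style request and response shapes are assumptions made for illustration and are not taken from this post, so check them against the Cerebras documentation before relying on them.

```python
import json
import urllib.request

# Assumptions (not from this post): the endpoint URL, the model identifier,
# and the OpenAI-style chat payload. Verify all three in the Cerebras docs.
CEREBRAS_URL = "https://api.cerebras.ai/v1/chat/completions"
MODEL_ID = "llama3.1-70b"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build a POST request that carries a single user message."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        CEREBRAS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_text(response_body: bytes) -> str:
    """Pull the assistant message out of an OpenAI-style response body."""
    parsed = json.loads(response_body)
    return parsed["choices"][0]["message"]["content"]


def ask(prompt: str, api_key: str, timeout: float = 30.0) -> str:
    """Send the prompt and return the reply (performs a real network call)."""
    request = build_request(prompt, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_text(response.read())
```

Calling `ask("Summarize this ticket", api_key)` would send one prompt and return the generated text. Keeping request construction and response parsing in separate functions makes both easy to check without touching the network.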

Step by step: Customize and deploy Llama 3.1-70B for low-latency AI

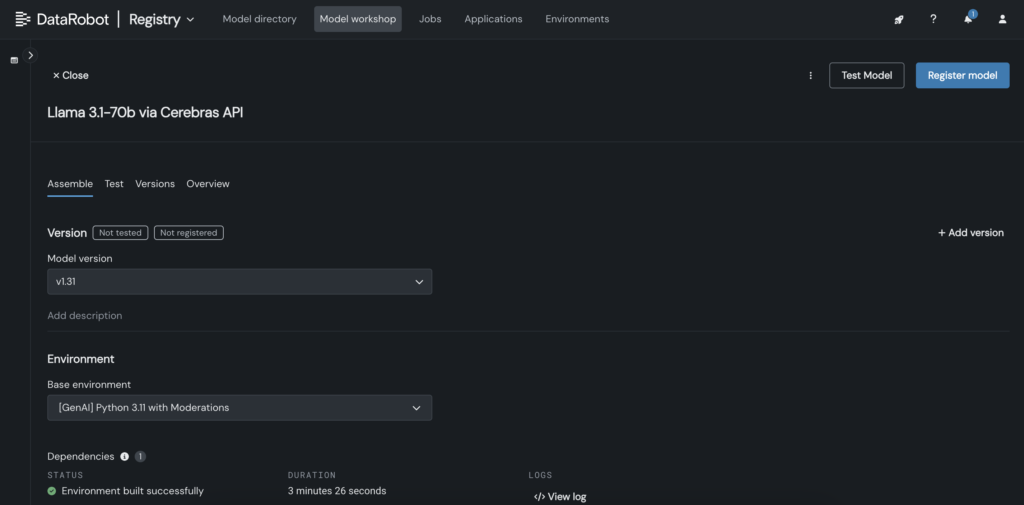

Integrating LLMs like Cerebras’ Llama 3.1-70B into DataRobot lets you customize, test, and deploy AI models in just a few steps. This process supports faster development, interactive testing, and greater control over LLM customization.

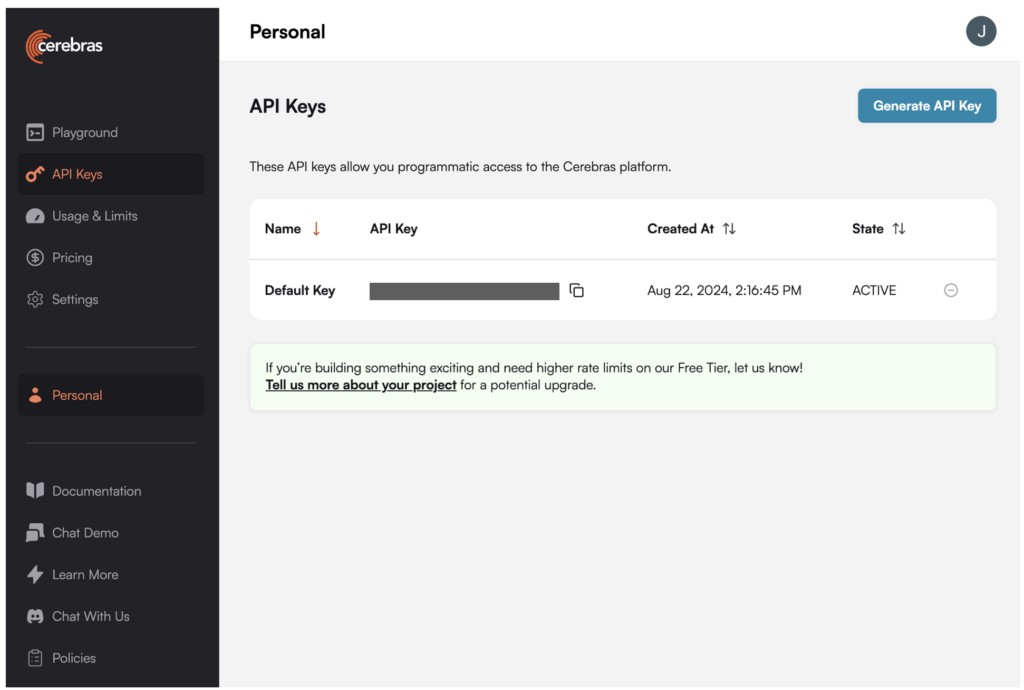

1. Generate an API key for Llama 3.1-70B on the Cerebras platform.

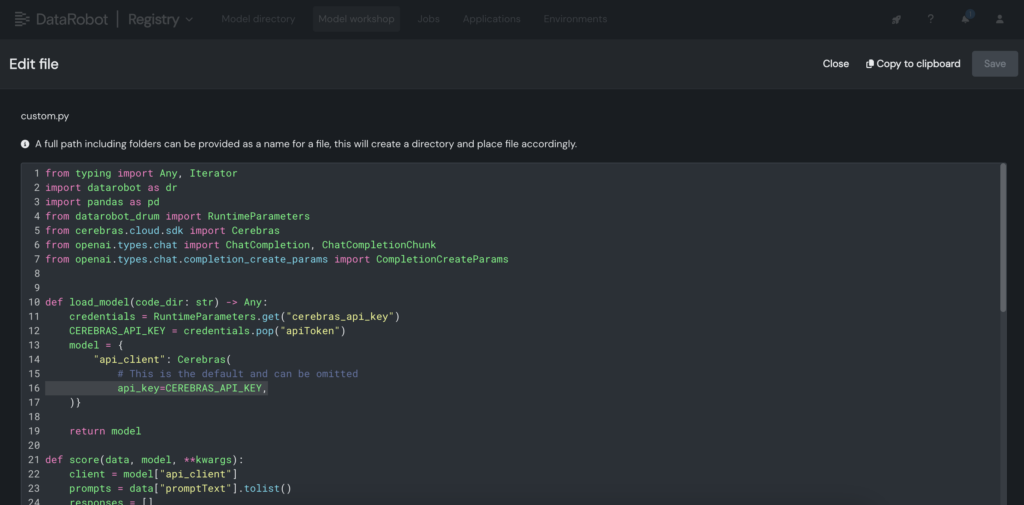

2. In DataRobot, create a custom model in the Model Workshop that calls the Cerebras endpoint where Llama 3.1-70B is hosted.

3. Within the custom model, place the Cerebras API key inside the custom.py file.

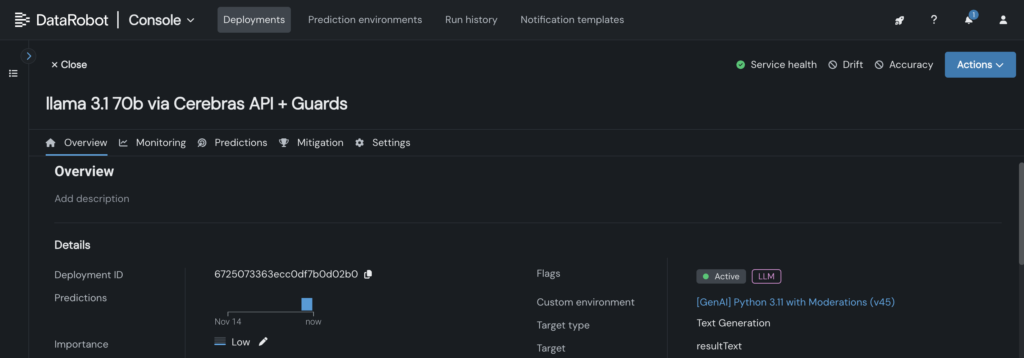

4. Deploy the custom model to an endpoint in the DataRobot Console, allowing LLM blueprints to leverage it for inference.

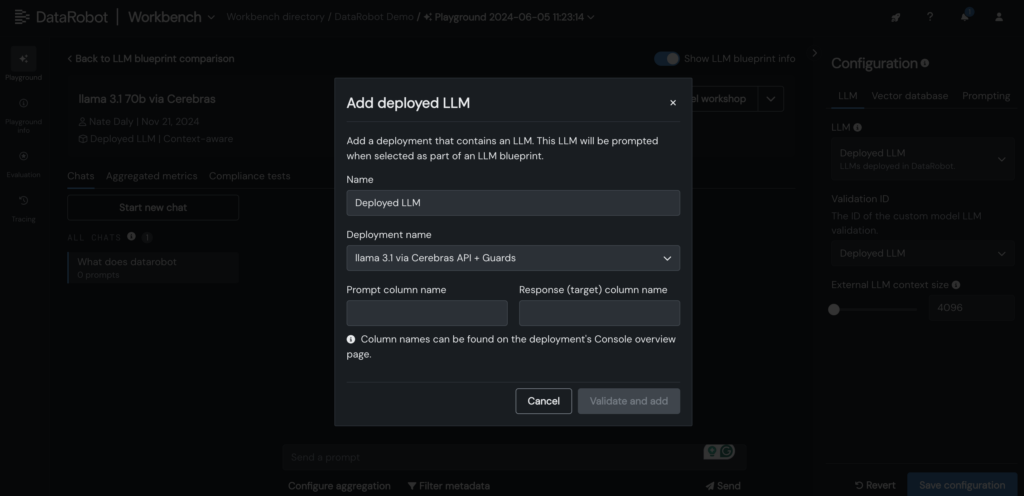

5. Add your deployed Cerebras LLM to the LLM blueprint in the DataRobot LLM Playground to start chatting with Llama 3.1-70B.

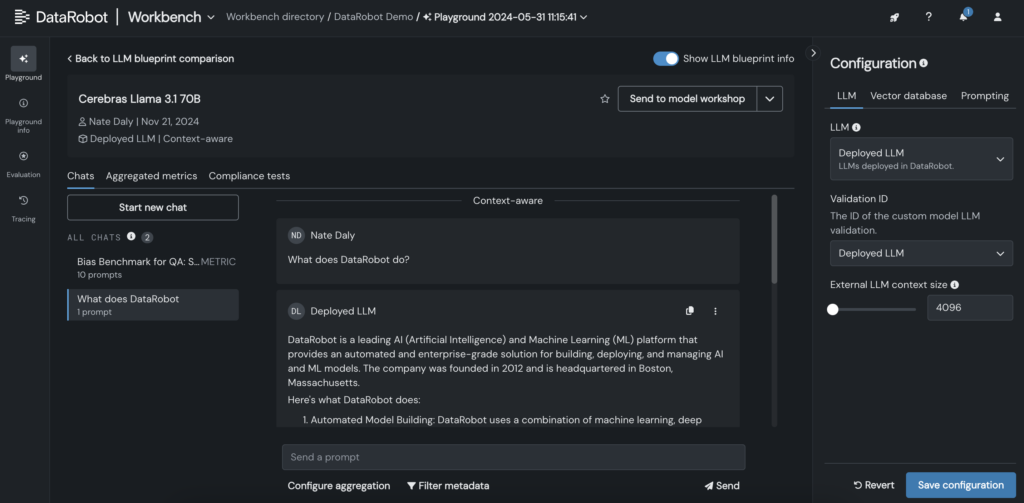

6. Once the LLM is added to the blueprint, test the responses by adjusting the prompting and retrieval parameters, and compare the results with other LLMs directly in the DataRobot GUI.
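Steps 2 and 3 above could be sketched as a custom.py along the following lines. This is an illustration under stated assumptions, not the exact file used here: the `load_model` and `score` hook names follow DataRobot's custom model conventions as we understand them, while the endpoint URL, the model identifier, the `promptText` and `resultText` column names, and the `CEREBRAS_API_KEY` environment variable are hypothetical placeholders. The sketch reads the key from the environment rather than writing it into the file; adapt that to however you manage credentials.

```python
import json
import os
import urllib.request

# Assumptions (not from this post): URL, model id, column names, and the
# environment variable name are illustrative placeholders.
CEREBRAS_URL = "https://api.cerebras.ai/v1/chat/completions"
MODEL_ID = "llama3.1-70b"
PROMPT_COLUMN = "promptText"
RESULT_COLUMN = "resultText"


def call_cerebras(prompt, api_key):
    """POST one prompt to the hosted model and return the reply text."""
    body = json.dumps(
        {"model": MODEL_ID, "messages": [{"role": "user", "content": prompt}]}
    ).encode("utf-8")
    request = urllib.request.Request(
        CEREBRAS_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read())["choices"][0]["message"]["content"]


def generate_replies(prompts, model):
    """Answer each prompt using the callable and key stored in `model`."""
    return [model["complete"](prompt, model["api_key"]) for prompt in prompts]


def load_model(code_dir):
    """Hook assumed to run once at startup; its return value is passed to score()."""
    return {
        "api_key": os.environ.get("CEREBRAS_API_KEY", ""),
        "complete": call_cerebras,
    }


def score(data, model, **kwargs):
    """Hook assumed to run per request; returns one completion per input row."""
    import pandas as pd  # imported here so the helpers above load without pandas

    replies = generate_replies(data[PROMPT_COLUMN].tolist(), model)
    return pd.DataFrame({RESULT_COLUMN: replies})
```

Storing the completion function in the object returned by `load_model` means a stub can be swapped in for local testing, so the hooks can be exercised before the model is deployed in step 4.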

Push the boundaries of LLM inference for your AI applications

Deploying LLMs like Llama 3.1-70B with low latency and real-time responsiveness is no easy task. But with the right tools and workflows, you can achieve both.

By integrating LLMs into DataRobot’s LLM Playground and leveraging Cerebras’ optimized inference, you can simplify customization, speed up testing, and reduce complexity, all while maintaining the performance your users expect.

As LLMs grow larger and more powerful, having a streamlined process for testing, customization, and integration will be essential for teams looking to stay ahead.

Explore it yourself. Access Cerebras Inference, generate your API key, and start building AI applications in DataRobot.

About the author

Kumar Venkateswar is Vice President of Product, Platform and Ecosystem at DataRobot. He leads product management for DataRobot’s core services and ecosystem partnerships, bridging the gaps between efficient infrastructure and integrations that maximize AI outcomes. Prior to DataRobot, Kumar worked at Amazon and Microsoft, including leading product management teams for Amazon SageMaker and Amazon Q Business.

Nathaniel Daly is a Senior Product Manager at DataRobot focusing on AutoML and time series products. His goal is to bring advances in data science to users so they can leverage this value to solve real-world business problems. He holds a bachelor’s degree in Mathematics from the University of California, Berkeley.