Researchers at Stanford University and UC Berkeley recently announced the release of LOTUS version 1.0, an open source query engine designed to make LLM-powered data processing fast, easy, and declarative. The project's backers say that developing AI applications with LOTUS is as easy as writing pandas, while delivering performance and speed gains over existing approaches.

There is no denying the great potential of large language models (LLMs) to power AI applications that can analyze and reason over large amounts of source data. In some cases, these LLM-powered AI applications can match, and even exceed, human capabilities in advanced fields such as medicine and law.

Despite AI's huge upside, developers have struggled to build end-to-end systems that take full advantage of its core technological advances. One of the big obstacles is the lack of the right abstraction layer. While SQL is algebraically complete for structured data residing in tables, we lack unified commands for processing unstructured data residing in documents.

That's where LOTUS, which stands for LLMs Over Tables of Unstructured and Structured data, comes in. In a new paper titled "Semantic Operators: A Declarative Model for Rich, AI-based Analytics Over Text Data," computer science researchers including Liana Patel, Sid Jha, Parth Asawa, Melissa Pan, Harshit Gupta, and Stanley Chan discuss their approach to solving this big AI challenge.

The LOTUS team, which includes legendary computer scientists Matei Zaharia, a Berkeley CS professor and the creator of Apache Spark, and Carlos Guestrin, a Stanford professor and the creator of many open source projects, says in the paper that AI development currently lacks "high-level abstractions to perform bulk semantic queries across large corpora." With LOTUS, they seek to fill that void, starting with a set of semantic operators.

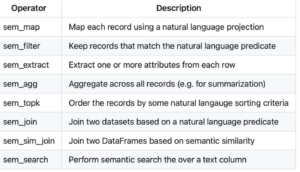

“We introduce semantic operators, a declarative programming interface that extends the relational model with composable AI-based operations for bulk semantic queries (e.g., filtering, sorting, joining or aggregating records using natural language criteria),” the researchers write. “Each operator can be implemented and optimized in multiple ways, opening a rich space for execution plans similar to relational operators.”
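
To make the idea concrete, here is a minimal, offline sketch of two semantic operators in plain Python, assuming rows are dictionaries and a natural-language criterion is a template with `{column}` placeholders. The functions `render`, `sem_filter`, and `sem_join` and the lookup-table judge are hypothetical illustrations, not the LOTUS API; in a real engine the judge would be an LLM call, and each operator could be backed by different algorithms.

```python
from typing import Callable, Dict, List

# A row maps column names to text values.
Row = Dict[str, str]
# A judge decides whether a natural-language statement is true. In a real
# engine this would be an LLM call; here it is a hypothetical stand-in.
Judge = Callable[[str], bool]


def render(criterion: str, row: Row) -> str:
    """Fill the {column} placeholders of a criterion with a row's values."""
    return criterion.format(**row)


def sem_filter(rows: List[Row], criterion: str, judge: Judge) -> List[Row]:
    """Keep the rows whose rendered criterion the judge accepts."""
    return [row for row in rows if judge(render(criterion, row))]


def sem_join(left: List[Row], right: List[Row], criterion: str, judge: Judge) -> List[Row]:
    """Nested-loop semantic join: ask the judge about every (left, right) pair."""
    joined = []
    for l_row in left:
        for r_row in right:
            merged = {**l_row, **r_row}  # assumes column names do not collide
            if judge(render(criterion, merged)):
                joined.append(merged)
    return joined


if __name__ == "__main__":
    # Hypothetical lookup-table judge: a statement is "true" if listed here.
    facts = {
        "Linear Algebra requires a lot of math",
        "Linear Algebra teaches matrix math",
        "Poetry teaches creative writing",
    }

    def is_true(statement: str) -> bool:
        return statement in facts

    courses = [{"course": "Linear Algebra"}, {"course": "Poetry"}]
    skills = [{"skill": "matrix math"}, {"skill": "creative writing"}]
    print(sem_filter(courses, "{course} requires a lot of math", is_true))
    # [{'course': 'Linear Algebra'}]
    print(sem_join(courses, skills, "{course} teaches {skill}", is_true))
    # [{'course': 'Linear Algebra', 'skill': 'matrix math'}, {'course': 'Poetry', 'skill': 'creative writing'}]
```

The nested-loop join asks the judge about every pair of rows, which is exactly the kind of cost a declarative optimizer would try to avoid.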

These semantic operators are packaged in LOTUS, the open source query engine, which can be called through a DataFrame API. The researchers found several ways to optimize the operators, speeding up common operations such as semantic filtering, grouping, and joins by up to 400x over other methods. LOTUS queries match or exceed competing approaches to building AI pipelines while maintaining or improving accuracy, they say.
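
The article does not detail how these speedups are achieved. One general technique for cutting expensive LLM calls in a semantic filter is a model cascade, sketched below under stated assumptions: a cheap proxy model scores each item, confident scores are accepted or rejected directly, and only uncertain items go to the expensive model. `cascade_filter`, `proxy`, `oracle`, and the `low`/`high` thresholds are hypothetical names; this is not presented as the LOTUS implementation, and choosing thresholds that meet an accuracy target is left out.

```python
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")


def cascade_filter(
    items: List[T],
    proxy: Callable[[T], float],
    oracle: Callable[[T], bool],
    low: float,
    high: float,
) -> Tuple[List[T], int]:
    """Filter items with a cheap proxy, escalating only uncertain ones.

    proxy(item) returns an estimated probability that the predicate holds
    (hypothetically, a small model); oracle(item) is the expensive, trusted
    judgment (hypothetically, a large LLM). Returns the kept items and the
    number of oracle calls made.
    """
    if not 0.0 <= low <= high <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= low <= high <= 1")
    kept: List[T] = []
    oracle_calls = 0
    for item in items:
        score = proxy(item)
        if score >= high:
            kept.append(item)  # confident "yes": skip the expensive model
        elif score <= low:
            continue  # confident "no": skip the expensive model
        else:
            oracle_calls += 1  # uncertain: ask the expensive model
            if oracle(item):
                kept.append(item)
    return kept, oracle_calls


if __name__ == "__main__":
    scores = {"a": 0.95, "b": 0.05, "c": 0.5, "d": 0.6}
    kept, calls = cascade_filter(list(scores), scores.__getitem__, lambda x: x == "c", 0.1, 0.9)
    print(kept, calls)  # ['a', 'c'] 2
```

In this toy run only two of the four items reach the expensive model; the savings in practice depend on how often the proxy is confident.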

“Like relational operators, semantic operators are powerful, expressive, and can be implemented by a variety of AI-based algorithms, opening a rich space for execution plans and optimizations under the hood,” one of the researchers, Liana Patel, a PhD student at Stanford, says in a post on X.

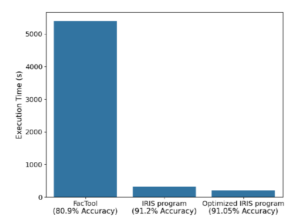

Comparison of a state-of-the-art fact-checking tool (FacTool) against a short LOTUS program (middle) and the LOTUS program implemented with declarative optimizations and accuracy guarantees (right). (Source: "Semantic Operators: A Declarative Model for Rich, AI-based Analytics Over Text Data")

LOTUS's semantic operators, which are available for download, implement a range of functions over both structured tables and unstructured text fields. Each operator, including map, filter, extract, aggregate, group-by, ranking, join, and search, is built on algorithms the LOTUS team selected to implement that particular function.
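
As an illustration of one operator from that list, a ranking (top-k) operator can be sketched with a pairwise judge: ask which of two items better satisfies the criterion and sort accordingly. This is a minimal sketch assuming a hypothetical `prefers` function standing in for an LLM comparison; `sem_topk` is an illustrative name, and this is not necessarily the algorithm LOTUS selects.

```python
from functools import cmp_to_key
from typing import Callable, List, TypeVar

T = TypeVar("T")


def sem_topk(items: List[T], k: int, prefers: Callable[[T, T], bool]) -> List[T]:
    """Rank items with a pairwise judge and return the best k.

    prefers(a, b) returns True when a better satisfies the ranking criterion
    than b; in a real system it would be an LLM prompt comparing two items.
    """

    def compare(a: T, b: T) -> int:
        if prefers(a, b):
            return -1  # a sorts before b
        if prefers(b, a):
            return 1  # b sorts before a
        return 0  # the judge sees no difference

    return sorted(items, key=cmp_to_key(compare))[:k]


if __name__ == "__main__":
    abstracts = [
        "short abstract",
        "a much longer and more detailed abstract",
        "medium length abstract",
    ]

    # Hypothetical stand-in judge: "more detailed" means "longer".
    def more_detailed(a: str, b: str) -> bool:
        return len(a) > len(b)

    print(sem_topk(abstracts, 2, more_detailed))
    # ['a much longer and more detailed abstract', 'medium length abstract']
```

Because an LLM judge may answer inconsistently, a production operator would need to handle non-transitive comparisons; Python's `sorted` still terminates in that case, but the resulting order is not well defined.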

The optimizations developed by the researchers are only the start of the project, as they envision a wide variety being added over time. The project also supports the creation of semantic indices built on natural-language text columns to speed up query processing.
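
In its simplest form, a semantic index embeds a text column once and reuses those vectors for every query. The sketch below assumes a word-count "embedding" and cosine similarity so that it runs without any model; `embed`, `cosine`, and `SemanticIndex` are hypothetical names, and a real index would typically use a learned embedding model and an approximate nearest-neighbor structure.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Stand-in embedding: lowercase word counts (not a neural embedding)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticIndex:
    """Embed a text column once, then answer similarity queries from the cache."""

    def __init__(self, docs: List[str]):
        self.docs = docs
        self.vectors = [embed(d) for d in docs]  # built once, reused by every query

    def search(self, query: str, k: int) -> List[Tuple[str, float]]:
        """Return the k documents most similar to the query, best first."""
        q = embed(query)
        scored = [(doc, cosine(q, vec)) for doc, vec in zip(self.docs, self.vectors)]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


if __name__ == "__main__":
    index = SemanticIndex([
        "fact checking with claims",
        "medical label classification",
        "search and ranking of papers",
    ])
    print(index.search("ranking papers", 1)[0][0])  # search and ranking of papers
```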

LOTUS can be used to build a variety of AI applications, including fact-checking, multi-label medical classification, search and ranking, and text summarization, among others. To demonstrate its capability and performance, the researchers tested LOTUS-based applications on several well-known datasets, such as the FEVER dataset (for fact-checking), the BioDEX dataset (for multi-label medical classification), the BEIR benchmark (for search and ranking), and the arXiv archive (for text summarization).

The results demonstrate "the generality and effectiveness" of the LOTUS model, the researchers write. LOTUS matched or exceeded the accuracy of state-of-the-art pipelines for each task while running up to 28× faster, they add.

“For each task, we find that LOTUS programs capture high-quality, state-of-the-art query pipelines with low development overhead, and that they can be automatically optimized with accuracy guarantees to achieve higher performance than existing implementations,” the researchers wrote in the paper.

You can read more about LOTUS at lotus-data.github.io.
