Building an end-to-end AI or ML platform typically requires several technology layers for storage, analytics, business intelligence (BI) tools, and ML models, so that data can be analyzed and the findings shared with business functions. The challenge is to enforce consistent and effective governance controls across these components when they are owned by different teams.

Unity Catalog is Databricks' centralized metadata layer, designed to manage data access, security, and lineage. It also serves as the foundation for search and discovery within the platform. Unity Catalog facilitates collaboration between teams by offering robust features such as role-based access control (RBAC), audit trails, and data masking, ensuring that sensitive information is protected without hindering productivity. It also supports end-to-end lifecycles for ML models.

This guide provides an overview and guidelines on how to use Unity Catalog for machine learning use cases and how to collaborate between teams by sharing compute resources.

This blog post walks through the steps of the end-to-end machine learning lifecycle, taking advantage of Unity Catalog features on Databricks.

The example in this article uses a dataset that contains records of the number of COVID-19 cases by date in the US, with additional geographical information. The goal is to forecast how many cases of the virus will occur in the US over the next 7 days.

Key features for ML on Databricks

Databricks has released several features that provide better support for ML with Unity Catalog.

Requirements

- The workspace must be enabled for Unity Catalog. Workspace admins can consult the documentation on how to enable workspaces for Unity Catalog.

- You must use Databricks Runtime 15.4 LTS ML or above.

- A workspace admin must enable the dedicated group clusters preview using the Previews UI. See Manage Databricks Previews.

- If the workspace has Secure Egress Gateway enabled, pypi.org must be added to the allowed domains list. See Manage network policies for serverless egress control.

Set up a group

To enable collaboration, an account admin or a workspace admin needs to set up a group as follows:

- Click your user icon in the upper right and click Settings

- In the “Workspace admin” section, click “Identity and access”, then click “Manage” in the Groups section

- Click “Add group”

- Click “Add new”

- Enter the group name and click Add

- Search for your newly created group and verify that the Source column says “Account”

- Click your group's name in the search results to go to the group details

- Click the “Members” tab and add the desired members to the group

- Click the “Entitlements” tab and check both the “Workspace access” and “Databricks SQL access” entitlements

- If you want to manage the group from a non-admin account, you can grant that account the group manager role on the “Permissions” tab

- Note: The user account must be a member of the group to use group clusters; being a group manager is not enough.

Enable dedicated group clusters

Dedicated group clusters are in Public Preview. To use the feature, a workspace admin must enable it using the Previews UI.

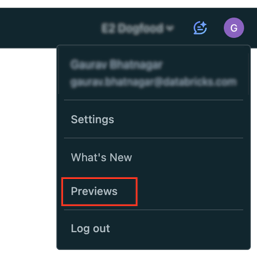

- Click your username in the top bar of the Databricks workspace.

- In the menu, select Previews.

- Use the toggle for Compute: Dedicated group clusters to enable or disable the preview.

Create group compute

Dedicated access mode is the latest version of single user access mode. With dedicated access, a compute resource can be assigned to a single user or to a group, and only the assigned users can use that compute resource.

To create a compute resource with Databricks Runtime for ML and assign it to a group:

- In your Databricks workspace, go to Compute and click Create compute.

- Check “Machine learning” in the Performance section to use Databricks Runtime with ML. Choose “15.4 LTS” under Databricks Runtime. Select the instance types and number of workers you need.

- Expand the Advanced section at the bottom of the page.

- Under Access mode, click Manual and then select Dedicated (formerly: Single user) from the drop-down menu.

- In the Single user or group field, select the group you want assigned to this resource.

- Configure any other compute settings as needed, then click Create.

After the cluster starts, all users in the group can share the same cluster. For more details, see Best practices for managing group clusters.

Data preprocessing with Delta Live Tables (DLT)

In this section, we will:

- Read the raw data and save it to a volume.

- Read the records from the ingestion table and use Delta Live Tables expectations to create a new table that contains cleansed data.

- Use the cleansed records as input to Delta Live Tables queries that create derived datasets.
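To make the cleansing step concrete, here is a minimal plain-Python sketch of what a drop-on-violation expectation does to incoming records. It is not the DLT API (in a pipeline, similar behavior is usually expressed with decorators such as `@dlt.expect_or_drop`). The rule names and the checks themselves are assumptions for illustration; only the `date` and `cases` column names come from this example's dataset.

```python
from datetime import date

# Hypothetical data-quality rules, keyed by a descriptive name.
# Each rule returns True when a record is acceptable.
RULES = {
    "valid_date": lambda row: isinstance(row.get("date"), date),
    "non_negative_cases": lambda row: isinstance(row.get("cases"), int)
    and row["cases"] >= 0,
}


def apply_expectations(rows, rules=RULES):
    """Drop every row that fails at least one rule.

    Returns (kept_rows, violation_counts), where violation_counts maps each
    rule name to the number of rows that failed it.
    """
    kept = []
    violations = {name: 0 for name in rules}
    for row in rows:
        failed = [name for name, check in rules.items() if not check(row)]
        for name in failed:
            violations[name] += 1
        if not failed:
            kept.append(row)
    return kept, violations


raw_rows = [
    {"date": date(2020, 3, 1), "cases": 10},
    {"date": None, "cases": 5},              # fails valid_date
    {"date": date(2020, 3, 2), "cases": -1},  # fails non_negative_cases
]
clean_rows, violation_counts = apply_expectations(raw_rows)
```

Tracking violation counts per rule mirrors the kind of data-quality metrics a pipeline reports, which helps when deciding whether a rule is too strict for the raw data.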

To set up a DLT pipeline, you need the following permissions:

- USE CATALOG and BROWSE on the parent catalog

- ALL PRIVILEGES, or USE SCHEMA, CREATE MATERIALIZED VIEW, and CREATE TABLE, on the target schema

- ALL PRIVILEGES, or READ VOLUME and WRITE VOLUME, on the target volume
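The permissions above could be granted to a group with Unity Catalog `GRANT` statements. The sketch below only builds the SQL strings; the helper name `build_grants` and the example catalog, schema, volume, and group names are hypothetical and should be replaced with your own.

```python
def quote(identifier: str) -> str:
    """Backtick-quote an identifier, escaping embedded backticks."""
    return "`" + identifier.replace("`", "``") + "`"


def build_grants(catalog: str, schema: str, volume: str, principal: str) -> list[str]:
    """Return GRANT statements covering the pipeline permissions listed above."""
    cat = quote(catalog)
    sch = f"{cat}.{quote(schema)}"
    vol = f"{sch}.{quote(volume)}"
    who = quote(principal)
    return [
        f"GRANT USE CATALOG, BROWSE ON CATALOG {cat} TO {who}",
        f"GRANT USE SCHEMA, CREATE MATERIALIZED VIEW, CREATE TABLE ON SCHEMA {sch} TO {who}",
        f"GRANT READ VOLUME, WRITE VOLUME ON VOLUME {vol} TO {who}",
    ]


# Hypothetical names, for illustration only.
statements = build_grants("main", "ml_demo", "raw_data", "ml-team")
for statement in statements:
    print(statement)
```

In a Databricks notebook, each statement could then be run with `spark.sql(statement)` by a user who is allowed to grant these privileges.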

- Download the data to the volume: this example loads data from a Unity Catalog volume.

Replace the catalog, schema, and volume placeholders with the catalog, schema, and volume names for a Unity Catalog volume. The provided code attempts to create the specified schema and volume if these objects do not exist. You must have the appropriate privileges to create and write to objects in Unity Catalog. See Requirements.

- Create a pipeline. To configure a new pipeline, do the following:

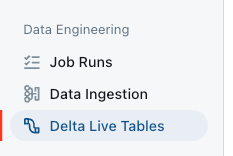

- Within the sidebar, click on Dwell Tables Delta in Information Engineering part.

- Click on Create pipe.

- Within the identify of the pipe, write a novel pipe identify.

- Choose the verification field with out server.

- Within the vacation spot, to configure a Catalog location of Unity the place tables are printed, choose a catalog and a scheme.

- Superior, click on Add configuration after which outline the pipeline parameters for the catalog, the scheme and the quantity to which it downloaded information utilizing the next parameter names:

- My_catalog

- My_schema

- My_volume

- Click on Create.

The pipeline UI appears for the new pipeline. A source code notebook is automatically created and configured for the pipeline.

- Within the sidebar, click on Dwell Tables Delta in Information Engineering part.

- Declare materialized views and streaming tables. You can use Databricks notebooks to interactively develop and validate source code for Delta Live Tables pipelines.

- Start a pipeline update by clicking the Start button at the top of the notebook or in the DLT UI. The output is written to the catalog and schema defined for the pipeline:

`.. `

Model training on the materialized view of DLT

We will launch a serverless forecasting experiment on the materialized view generated by the DLT pipeline.

- Click Experiments in the Machine Learning section of the sidebar.

- In the Forecasting tile, select Start training.

- Fill in the configuration forms:

- Select the materialized view as the training data:

`. .covid_case_by_date`

- Select date as the time column

- Select Days as the forecast frequency

- Enter 7 as the horizon

- Select cases as the target column in the Prediction section

- Set Model registration to

`. `

- Click Start training to start the forecasting experiment.

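To illustrate how the forecast frequency and horizon settings above interact, the following sketch lists the dates a forecast would cover: with a daily frequency and a horizon of 7, predictions span the 7 days after the last observed date. The function name and the example date are hypothetical, and this is not how the forecasting experiment is implemented internally.

```python
from datetime import date, timedelta


def forecast_dates(last_observed: date, horizon: int = 7, step_days: int = 1) -> list[date]:
    """Return the dates covered by a forecast.

    horizon   -- number of future periods to predict (7 in this example)
    step_days -- length of one period in days (1 for a daily frequency)
    """
    if horizon < 1 or step_days < 1:
        raise ValueError("horizon and step_days must be positive")
    return [last_observed + timedelta(days=step_days * i) for i in range(1, horizon + 1)]


# Hypothetical last date in the training data.
future = forecast_dates(date(2020, 12, 25))
```

Changing the frequency to weekly would correspond to `step_days=7`, so the same horizon of 7 would then cover seven weeks instead of seven days.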

After training completes, the prediction results are stored in the specified Delta table and the best model is registered to Unity Catalog.

From the experiments page, you can choose from the following next steps:

- Select View predictions to see the forecasting results table.

- Select Batch inference notebook to open an auto-generated notebook for batch inference using the best model.

- Select Create serving endpoint to deploy the best model to a Model Serving endpoint.

Conclusion

In this blog, we explored the end-to-end process of setting up and training forecasting models on Databricks, from data preprocessing to model training. By taking advantage of Unity Catalog, group clusters, Delta Live Tables, and AutoML forecasting, we were able to streamline model development and simplify collaboration between teams.