In collaboration with NVIDIA, we have integrated NVIDIA NIM microservices and the NVIDIA AgentIQ toolkit into Azure AI Foundry, unlocking unprecedented efficiency, performance, and cost optimization for your AI projects.

I’m excited to share a major leap in the way we develop and deploy AI. In collaboration with NVIDIA, we have integrated NVIDIA NIM microservices and the NVIDIA AgentIQ toolkit into Azure AI Foundry, unlocking unprecedented efficiency, performance, and cost optimization for your AI projects.

A new era of AI efficiency

In today’s fast-paced digital landscape, scaling AI applications requires more than innovation alone; it requires streamlined processes that deliver fast time to market without compromising performance. With enterprise AI projects often taking 9 to 12 months to move from conception to production, every efficiency gain counts. Our integration is designed to change that by simplifying every step of the AI development lifecycle.

NVIDIA NIM in Azure AI Foundry

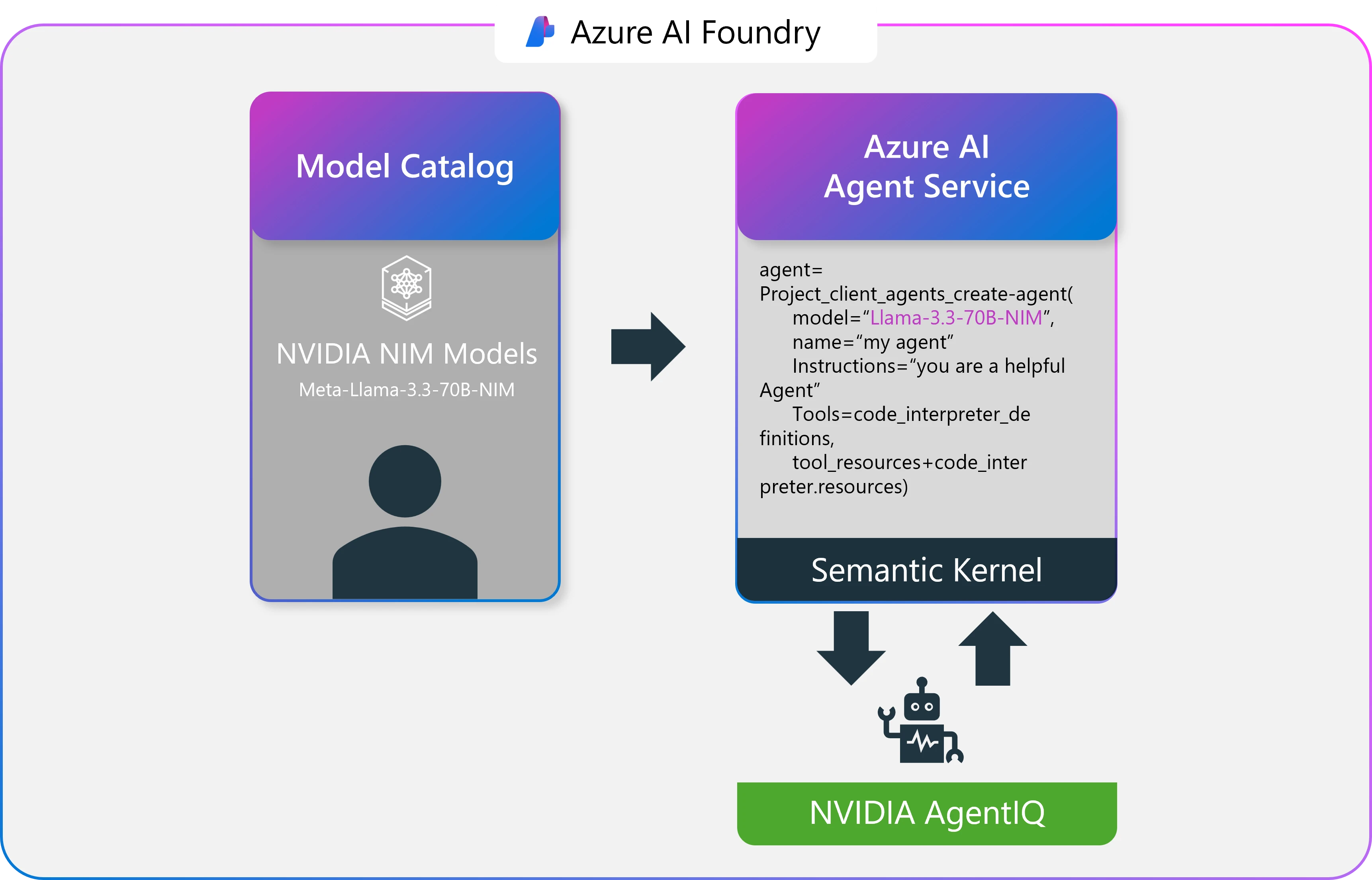

NVIDIA NIM™, part of the NVIDIA AI Enterprise software suite, is a set of easy-to-use microservices designed for secure, reliable, and high-performance AI inference. Building on robust technologies such as NVIDIA Triton Inference Server™, TensorRT™, TensorRT-LLM, and PyTorch, NIM microservices are built to scale seamlessly on managed Azure compute.

They provide:

- Zero-configuration deployment: Get up and running quickly with out-of-the-box optimization.

- Seamless Azure integration: Works effortlessly with Azure AI Agent Service and Semantic Kernel.

- Enterprise-grade reliability: Benefit from NVIDIA AI Enterprise support for continuous performance and security.

- Scalable inference: Take advantage of Azure’s NVIDIA-accelerated infrastructure for demanding workloads.

- Optimized workflows: Accelerate applications ranging from large language models to advanced analytics.

Deploying these services is simple. With just a few clicks, whether you select a model such as Llama-3.3-70B-NIM or another from the model catalog in Azure AI Foundry, you can integrate them directly into your AI workflows and start building generative AI applications that work seamlessly within the Azure ecosystem.
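As a rough illustration of what "integrating into your AI workflows" can look like once a model is deployed, the sketch below builds a chat request in Python. It assumes the deployed endpoint accepts OpenAI-style chat completion requests with bearer-token authentication; the URL, key, and model name are hypothetical placeholders, so use the values shown for your own deployment.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the values shown for your own deployment.
ENDPOINT_URL = "https://example.invalid/v1/chat/completions"
API_KEY = "<your-api-key>"
MODEL_NAME = "<your-deployed-model-name>"


def build_chat_request(prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        ENDPOINT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the endpoint uses bearer-token authentication.
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the first reply, assuming an OpenAI-style response body."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `send_chat_request(build_chat_request("Summarize this note."))` would then return the model's reply as a string, provided the placeholders point at a live deployment.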

Optimizing performance with NVIDIA AgentIQ

Once your NIM microservices are deployed, NVIDIA AgentIQ takes center stage. This open-source toolkit is designed to connect, profile, and optimize teams of AI agents, enabling your systems to run at peak performance. AgentIQ offers:

- Profiling and optimization: Use real-time telemetry to fine-tune AI agent placement, reducing latency and compute overhead.

- Dynamic inference enhancements: Continuously collect and analyze metadata, such as predicted tokens per call, estimated time to next inference, and expected token lengths, to dynamically improve agent performance.

- Integration with Semantic Kernel: Direct integration with Azure AI Foundry Agent Service further equips your agents with improved semantic reasoning and task execution.

This intelligent profiling not only reduces compute costs but also increases accuracy and responsiveness, so that every part of your agentic workflow is optimized for success.
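To make the idea of profiling agent calls concrete, here is a minimal sketch in plain Python. It does not use the AgentIQ API; the `CallRecord` schema and the helper names are hypothetical, chosen only to show how per-call latency and token counts could be aggregated to find the step worth optimizing first.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class CallRecord:
    """One model call made by an agent step (hypothetical schema, not an AgentIQ type)."""
    step: str
    latency_ms: float
    output_tokens: int


def summarize(records):
    """Group records by step and return call count, mean latency, and mean output tokens."""
    by_step = defaultdict(list)
    for record in records:
        by_step[record.step].append(record)
    return {
        step: {
            "calls": len(calls),
            "mean_latency_ms": mean(c.latency_ms for c in calls),
            "mean_output_tokens": mean(c.output_tokens for c in calls),
        }
        for step, calls in by_step.items()
    }


def slowest_step(summary):
    """Return the name of the step with the highest mean latency."""
    return max(summary, key=lambda step: summary[step]["mean_latency_ms"])
```

Feeding a list of `CallRecord` objects to `summarize` and then to `slowest_step` points at the agent step that contributes the most latency, which is the kind of signal a profiler uses to decide where tuning pays off.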

In addition, we will soon integrate the NVIDIA Llama Nemotron Reason reasoning model. NVIDIA Llama Nemotron Reason is a powerful family of AI models designed for advanced reasoning. According to NVIDIA, Nemotron excels at coding, complex math, and scientific reasoning, while understanding user intent and seamlessly calling tools such as search and translation to carry out tasks.

Real-world impact

Industry leaders are already seeing the benefits of these innovations.

Drew McCombs, Vice President of Cloud and Analytics at Epic, said:

“The launch of NVIDIA NIM microservices in Azure AI Foundry offers a secure and efficient way for Epic to deploy open-source generative AI models that improve patient care, increase clinician and operational efficiency, and uncover new insights to drive medical innovation. In collaboration with UW Health and UC San Diego Health, we’re also researching methods to evaluate clinical summaries with these advanced models. Together, we’re using the latest AI technology in ways that truly improve the lives of clinicians and patients.”

Epic’s experience underscores how our integrated solution can drive transformative change, not only in healthcare but in every industry where high-performance AI is a game changer. As Jon Sigler, EVP, Platform and AI at ServiceNow, said:

“This combination of the ServiceNow AI platform with NVIDIA NIM and Microsoft Azure AI Foundry and Azure AI Agent Service helps us bring industry-specific AI agents to market, delivering full-stack AI solutions to help solve problems faster, deliver great customer experiences, and accelerate improvements across organizations.”

Unlock innovation with AI

By combining the robust deployment capabilities of NVIDIA NIM with the dynamic optimization of NVIDIA AgentIQ, Azure AI Foundry provides a turnkey solution for building, deploying, and scaling enterprise-grade agentic applications. This integration can accelerate AI deployments, improve agentic workflows, and reduce infrastructure costs, allowing you to focus on what really matters: driving innovation.

Ready to accelerate your AI journey?

Deploy NVIDIA NIM microservices and optimize your AI agents with the NVIDIA AgentIQ toolkit in Azure AI Foundry. Explore more about the Azure AI Foundry model catalog.

Let’s build a smarter, faster, and more efficient future together.