(Lightspring/Shutterstock)

Before expanding into data governance, Alation made its name as a data catalog company, a category it's credited with creating. And with today's launch of AI Governance, the Silicon Valley firm is expanding once again, this time offering tools to govern the flow of data in AI environments.

As the explosion of generative AI continues, organizations are discovering that the technology brings risks as well as rewards. For example, users can upload sensitive or personally identifiable information to large language models (LLMs) running in the cloud. Confidential or copyrighted data could find its way into responses generated by LLMs. And LLMs have a tendency to make up responses out of thin air and to generate biased or toxic content.

These risks (among others) are driving the creation of regulations, such as the EU AI Act, that set limits on what is and isn't permitted. Organizations are struggling to figure out how to control their AI activities across many different areas, including their GenAI-related data streams.

With its new AI Governance solution, Alation focuses on some aspects of AI governance, but not all. According to Satyen Sangani, the company's co-founder and CEO, AI Governance is primarily aimed at identifying the data involved in AI training and inference, where that data comes from, and which people and use cases are involved in those AI data flows.

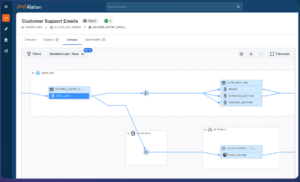

AI Governance tracks the data involved in AI projects, as well as the AI models, the people involved, and the use cases (Image courtesy of Alation)

“I think everybody needs to understand where these models come from, what models they have, how they're being leveraged, and what regulations would apply to them,” Sangani told BigDATAwire in an interview. “(AI Governance) gives you the ability to track everything you need to make sure you're running a compliant, low-risk AI operation.”

AI Governance builds on Alation's existing metadata-driven cataloging solution and uses its tag-based tracking system to let customers trace the lineage of data that's fed into LLMs, as well as which data is used for fine-tuning and retrieval-augmented generation (RAG). If a customer doesn't already have Alation's data catalog, one is implemented as part of AI Governance, Sangani says.

Customers shouldn't look to AI Governance to track how AI models themselves change over time. “We're not tracking any particular version of an LLM or trying to talk about the versions,” Sangani says. “What we're finding is that customers are deploying GPT-3.5, 4, Strawberry, and now they're trying to say, OK, here's the data I'm giving you, here are the products I'm giving this information to, and here are the people who are doing it.”

The GenAI revolution arrived so quickly that even this basic information often isn't tracked anywhere, which is why Alation is building it. The company's approach is to build a conceptual model of each AI model that can be referenced quickly to get a sense of how that model interacts with an organization's data, Sangani says.

Alation uses its metadata tracking capability to follow data flows in GenAI applications. For example, Alation discovers which file system an organization uses to store the unstructured call logs used to train a customer service chatbot. It also tracks which LLM created embeddings from that data and which vector database stores those embeddings. The software then tracks how all of this changes over time.
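To make the chatbot example concrete, here is a minimal Python sketch of a time-aware lineage graph of the kind described above. It is not Alation's API or data model: the `LineageGraph` class, its `record` and `upstream` methods, the asset names, and the choice to stamp each flow with the date it was first observed (as a simple way to represent change over time) are all hypothetical assumptions for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import date


@dataclass
class LineageGraph:
    """Hypothetical lineage store (not Alation's model).

    Assets are nodes; a "feeds" edge src -> dst records the date the flow was
    first observed, so the graph can be queried as of any date.
    """
    # dst -> {src: first_seen_date}
    edges: dict = field(default_factory=lambda: defaultdict(dict))

    def record(self, src: str, dst: str, seen: date) -> None:
        # Keep the earliest date on which this src -> dst flow was observed.
        current = self.edges[dst].get(src)
        if current is None or seen < current:
            self.edges[dst][src] = seen

    def upstream(self, asset: str, as_of: date) -> set:
        """Return every asset that transitively feeds `asset` as of `as_of`."""
        found, stack = set(), [asset]
        while stack:
            node = stack.pop()
            # .get() avoids creating empty entries in the defaultdict.
            for src, first_seen in self.edges.get(node, {}).items():
                if first_seen <= as_of and src not in found:
                    found.add(src)
                    stack.append(src)
        return found


if __name__ == "__main__":
    g = LineageGraph()
    # Hypothetical flow: call logs on a file system -> embedding model
    # -> vector database -> customer service chatbot.
    g.record("file-store/call-logs", "embedding-model-a", date(2024, 6, 1))
    g.record("embedding-model-a", "vector-db", date(2024, 6, 1))
    g.record("vector-db", "support-chatbot", date(2024, 6, 1))
    # Later, a second embedding model starts writing to the same vector DB.
    g.record("file-store/call-logs", "embedding-model-b", date(2024, 9, 1))
    g.record("embedding-model-b", "vector-db", date(2024, 9, 1))

    print(sorted(g.upstream("support-chatbot", as_of=date(2024, 7, 1))))
    print(sorted(g.upstream("support-chatbot", as_of=date(2024, 10, 1))))
```

Querying the chatbot's upstream sources at two dates shows how the same question ("what data feeds this app?") can have different answers as the pipeline evolves; a production catalog would of course track far richer metadata than a date per edge.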

Alation's AI Governance helps address several different versions of the same fear, Sangani says.

“So one version of the fear is that all these regulations are coming. I really don't know what to do about it, and I really don't know what I need to prove to you” to show that the GenAI app is kosher. “There's another version of it that says, I don't even know what I have, so how can I know what I need to comply with?”

Even if you have an inventory of use cases and projects, the next question is: at what stage of development or deployment is each GenAI application? Customers may have a good idea of what's in pilot versus what has been deployed to production, but tracking data usage throughout that DevOps journey is another challenge, Sangani says.

“How do I actually reproduce that information?” he says. “I don't think it's necessarily that people have bad intentions, but these data landscapes are really complicated and it's not always clear how things came to be.”

Tracking data pipelines was difficult enough when data was mostly created and consumed by deterministic applications, Sangani says. Add in the probabilistic nature of GenAI applications, and the complexity starts to become a real problem. While the space is maturing quickly, there are real frustrations, he says. “So it's a fun game.”
