(Logvin art/Shutterstock)

The generative AI revolution is reshaping how companies interact with computer systems and with their customers. Hundreds of billions of dollars are being invested in large language models (LLMs) and AI agents, and trillions are at stake. But GenAI has a significant problem: the tendency of LLMs to hallucinate. The question is: is this a fatal flaw, or can we work around it?

If you have worked much with LLMs, you have likely experienced an AI hallucination, or what some call a confabulation. AI models make things up for a variety of reasons: inaccurate, incomplete, or biased training data; ambiguous prompts; a lack of true understanding; context limitations; and a tendency to overgeneralize (overfitting the model).

Sometimes LLMs hallucinate for no good reason. Vectara CEO Amr Awadallah says that LLMs are subject to the limits of data compression on text, as expressed by Shannon’s information theorem. Because LLMs compress text beyond a certain point (12.5%), they enter what’s called the “lossy compression zone” and lose perfect recall.

That leads us to the inevitable conclusion that the tendency to fabricate isn’t a bug but a feature of these types of probabilistic systems. So what do we do?

Countering AI Hallucinations

Users have come up with several methods to control hallucinations, or at least to counteract some of their negative impacts.

For starters, you can get better data. AI models are only as good as the data they’re trained on. Many organizations have raised concerns about bias and quality in their data. While there are no easy fixes for improving data quality, customers who dedicate resources to better data management and governance can make a difference.

Users can also improve the quality of LLM responses by providing better prompts. The field of prompt engineering has emerged to serve this need. Users can also “ground” their LLM’s responses by providing better context through retrieval-augmented generation (RAG) techniques.
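To make the grounding idea concrete, here is a minimal Python sketch of the retrieval step in RAG. It is an illustration under simplifying assumptions, not any vendor’s implementation: the scorer is plain word overlap, standing in for the embedding-based vector search that production RAG systems typically use, and the function names `retrieve` and `build_grounded_prompt` are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercased word tokens: a crude stand-in for the embeddings real RAG uses.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    # Rank documents by how many words they share with the question.
    # sorted() stays stable with reverse=True, so ties keep their original order.
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(question: str, documents: list[str], k: int = 2) -> str:
    # Put the retrieved passages ahead of the question and tell the model to
    # answer only from them: this is the "grounding" step.
    passages = retrieve(question, documents, k)
    lines = [
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.",
        "",
    ]
    lines += [f"[{i}] {p}" for i, p in enumerate(passages, start=1)]
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


if __name__ == "__main__":
    docs = [
        "The Hughes Hallucination Evaluation Model scores outputs from 0 to 1.",
        "Nvidia sells GPUs for AI workloads.",
        "Retrieval-augmented generation adds retrieved passages to the prompt.",
    ]
    print(build_grounded_prompt(
        "What does retrieval-augmented generation add to the prompt?", docs, k=1))
```

The prompt string is what would be sent to the LLM; swapping the word-overlap scorer for a vector index changes which passages are found, not the shape of the grounded prompt.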

Instead of using a general-purpose LLM, fine-tuning an open source model on smaller, domain- or industry-specific data sets can also improve accuracy within that domain or industry. Similarly, a new generation of reasoning models, such as DeepSeek-R1 and OpenAI o1, which are trained on specialized data sets, include a feedback mechanism that lets the model explore different ways to answer a question: the so-called “reasoning” steps.

Implementing guardrails is another approach. Some organizations use a second AI model specifically designed to interpret the output of the primary LLM. When a hallucination is detected, the input or the context can be adjusted until the results come back clean. Similarly, keeping a human in the loop to detect when an LLM is going off the rails can also help avoid some of the worst LLM fabrications.
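The guardrail loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular company does it: `generate` and `check` are hypothetical callables standing in for the primary LLM and the second checking model, “adjusting the context” is modeled as trying a list of alternative contexts in order, the 0.5 acceptance threshold is arbitrary, and the toy word-overlap checker is far cruder than a real hallucination detector.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GuardedResult:
    answer: str
    attempts: int
    needs_human_review: bool


def guarded_answer(
    question: str,
    contexts: list[str],
    generate: Callable[[str, str], str],
    check: Callable[[str, str], float],
    threshold: float = 0.5,
) -> GuardedResult:
    # Try each candidate context in turn. `generate` plays the primary LLM and
    # `check` plays the second "guardrail" model, returning a score in [0, 1]
    # where higher means the answer is better supported by the context.
    answer = ""
    for attempt, context in enumerate(contexts, start=1):
        answer = generate(question, context)
        if check(answer, context) >= threshold:
            return GuardedResult(answer, attempt, needs_human_review=False)
    # Nothing passed the check: hand the last answer to a human reviewer.
    return GuardedResult(answer, len(contexts), needs_human_review=True)


# Hypothetical toy stand-ins for the two models, for illustration only.
def toy_generate(question: str, context: str) -> str:
    # With no context the "model" makes something up; otherwise it echoes the context.
    return context if context else "The answer is definitely 42."


def toy_check(answer: str, context: str) -> float:
    # Share of answer words that also appear in the context.
    words = set(answer.lower().split())
    if not words:
        return 0.0
    return len(words & set(context.lower().split())) / len(words)


if __name__ == "__main__":
    result = guarded_answer(
        "Who leads the leaderboard?",
        ["", "Gemini Flash leads the leaderboard."],
        toy_generate,
        toy_check,
    )
    print(result)
```

Replacing the toy functions with real model clients leaves the control flow unchanged. The design choice worth noting is the fallback: an answer that never passes the check is flagged for a person rather than silently returned.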

Hallucination Rates

When ChatGPT first came out, its hallucination rate was around 15% to 20%. The good news is that the hallucination rate appears to be going down.

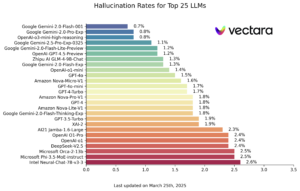

For example, Vectara’s Hallucination Leaderboard uses the Hughes Hallucination Evaluation Model (HHEM), which scores the likelihood that an output is true or false on a scale of 0 to 1. The Vectara leaderboard currently shows several LLMs with hallucination rates below 1%, led by Google Gemini-2.0 Flash. That is a big improvement from a year ago, when the leaderboard showed the leading LLMs with hallucination rates of around 3% to 5%.
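A leaderboard like this has to turn per-output scores into a single hallucination rate. The Python sketch below shows one plausible way to do that arithmetic. It assumes, as with HHEM, that scores near 1 mean an output is consistent with its source, and it assumes a 0.5 cutoff below which an output counts as a hallucination. The function name `hallucination_rate` is hypothetical, and the ten scores in the example are made up for illustration, not taken from Vectara’s data.

```python
def hallucination_rate(scores: list[float], threshold: float = 0.5) -> float:
    # Fraction of outputs whose consistency score falls below `threshold`.
    # Assumes each score is in [0, 1], higher meaning "consistent with source".
    if not scores:
        raise ValueError("need at least one score")
    for s in scores:
        if not 0.0 <= s <= 1.0:
            raise ValueError(f"score out of range: {s}")
    flagged = sum(1 for s in scores if s < threshold)
    return flagged / len(scores)


if __name__ == "__main__":
    # Made-up scores for ten summaries; two fall below 0.5.
    scores = [0.98, 0.91, 0.12, 0.77, 0.45, 0.99, 0.88, 0.95, 0.93, 0.97]
    print(f"{hallucination_rate(scores):.0%}")
```

With these illustrative scores the rate is 2 out of 10, or 20%; a model reaching the sub-1% figures on the leaderboard would need fewer than one flagged output per hundred.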

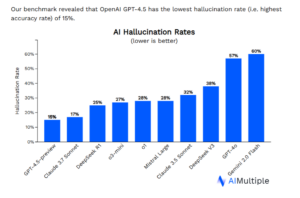

Other hallucination measures don’t show the same improvement. AIMultiple’s research arm benchmarked nine LLMs on their ability to recall information from CNN articles. The top-scoring LLM was GPT-4.5 preview, with a 15% hallucination rate. Google’s Gemini-2.0 Flash came in at 60%.

“LLM hallucinations have far-reaching effects that go well beyond small errors,” AIMultiple principal analyst Cem Dilmegani wrote in a March 28 blog post. “Inaccurate information produced by an LLM could result in legal ramifications, especially in regulated sectors such as healthcare, finance, and legal services. Organizations could be severely penalized if hallucinations caused by generative AI lead to violations or other negative consequences.”

High-Stakes AI

One company working to make AI usable for some high-stakes use cases is the search company Pearl. The company combines an AI-powered search engine with human expertise in professional services to minimize the chances that a hallucination reaches a user.

Pearl has taken steps to minimize the hallucination rate of its AI-powered search engine, which Pearl CEO Andy Kurtzig said is 22% more accurate than ChatGPT and Gemini out of the box. The company does this using standard techniques, including multiple models and guardrails. Beyond that, Pearl has hired 12,000 experts in fields such as medicine, law, auto repair, and pet health who can give AI-generated answers a quick sanity check to further improve accuracy.

“So, for example, if you have a legal problem or a medical problem or a problem with your pet, you’d start with the AI and get an AI answer through our superior quality system,” Kurtzig told BigDATAwire. “Then you’d have the option to get verification from an expert in that field, and you could take it one step further and talk with the expert.”

Kurtzig said there are three unresolved problems around AI: the persistent problem of AI hallucinations; mounting reputational and financial risk; and failing business models.

“Our estimate of the state of the art is roughly a 37% hallucination rate in professional services categories,” Kurtzig said. “If your doctor were right 63% of the time, I would not only be angry, I would be suing them for malpractice. That’s terrible.”

Big, wealthy AI companies are running a real financial risk by releasing AI models that are prone to hallucinating, Kurtzig said. He cited a lawsuit filed in Florida by the parents of a 14-year-old boy who died by suicide after an AI chatbot suggested it.

“When you have a system that’s hallucinating at any rate, and you have very deep pockets on the other end of that equation … and people are using it and trusting it, and these LLMs are giving these very confident answers even when they’re completely wrong, you’re going to end up with lawsuits,” he said.

Diminishing Returns

Anthropic’s CEO recently made news when he said that 90% of coding work would be done by AI within months. Kurtzig, who employs 300 developers, doesn’t see that happening soon. The real productivity gains are between 10% and 20%, he said.

The combination of reasoning models and AI agents is supposed to herald a new era of productivity, not to mention a 100-fold increase in inference workloads to keep all those Nvidia GPUs busy, according to Nvidia CEO Jensen Huang. However, even though reasoning models such as DeepSeek can run more efficiently than Gemini or GPT-4.5, Kurtzig doesn’t see them advancing the state of the art.

“They’re hitting diminishing returns,” Kurtzig said. “So each new percentage point of quality is really expensive. It’s a lot of GPUs. One data source I saw from Georgetown says another 10% improvement will cost $1 billion.”

Eventually, AI may pay off, he said. But there will be plenty of pain before we reach the other side.

“These three fundamental problems are huge and unresolved, and they’re going to push us into the trough of disillusionment in the hype cycle,” he said. “Quality is a problem, and we’re hitting diminishing returns. Risk is a huge problem that’s just starting to emerge. There’s a real cost there, both in human lives and in money. And then these companies aren’t making money. Almost all of them are losing money hand over fist.”

“We’ve got a trough of disillusionment to get through,” he added. “There’s a beautiful plateau of productivity on the other end, but we haven’t hit the trough of disillusionment yet.”
