In the rapidly evolving landscape of Generative AI (GenAI), data scientists and AI builders are constantly looking for powerful tools to create innovative applications using large language models (LLMs). DataRobot has introduced a suite of advanced LLM evaluation, testing, and assessment metrics in its Playground, offering unique capabilities that set it apart from other platforms.

These metrics, including faithfulness, correctness, citations, Rouge-1, cost, and latency, provide a comprehensive, standardized approach to validating the quality and performance of GenAI applications. By leveraging these metrics, customers and AI builders can develop reliable, efficient, high-value GenAI solutions with greater confidence, accelerating their time to market and gaining a competitive advantage. In this blog post, we’ll dive deeper into these metrics and explore how they can help you unlock the full potential of LLMs within the DataRobot platform.

Exploring comprehensive evaluation metrics

DataRobot’s Playground offers a comprehensive set of evaluation metrics that allow users to benchmark, compare performance, and rank their retrieval-augmented generation (RAG) experiments. These metrics include:

- Faithfulness: This metric evaluates how accurately the responses generated by the LLM reflect the data retrieved from the vector databases, ensuring the reliability of the information.

- Correctness: By comparing the generated responses to the ground truth, the correctness metric evaluates the accuracy of the LLM outputs. This is particularly valuable for applications where accuracy is critical, such as in healthcare, finance, or legal fields, because it allows customers to trust the information provided by the GenAI application.

- Citations: This metric tracks the documents retrieved from the vector database when the LLM is prompted, providing insight into the sources used to generate the responses. It helps users ensure that their application leverages the most appropriate sources, enhancing the relevance and credibility of the generated content. The Playground’s guard models can help verify the quality and relevance of the citations used by LLMs.

- Rouge-1: The Rouge-1 metric calculates the unigram (single-word) overlap between the generated response and the documents retrieved from the vector databases, allowing users to evaluate the relevance of the generated content.

- Cost and latency: We also provide metrics to track the cost and latency associated with running the LLM, allowing users to optimize their experiments for efficiency and cost-effectiveness. These metrics help organizations find the right balance between performance and budget constraints, ensuring that deploying GenAI applications at scale is feasible.

- Guard models: Our platform allows users to apply guard models from the DataRobot Registry, or custom models, to evaluate LLM responses. Models such as toxicity and PII detectors can be added to the Playground to evaluate each LLM output. This makes it easy to test guard models on LLM responses before deploying them to production.
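To make the Rouge-1 description above concrete, here is a minimal sketch of unigram overlap in plain Python. It is an illustration under stated assumptions, not DataRobot’s implementation: the tokenizer (lowercased alphanumeric words), the function names `tokenize` and `rouge_1`, and the choice to report precision, recall, and F1 are all assumptions made for this example.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens (an assumed tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rouge_1(candidate: str, reference: str) -> dict[str, float]:
    """Return unigram-overlap precision, recall, and F1 between two strings."""
    cand_counts = Counter(tokenize(candidate))
    ref_counts = Counter(tokenize(reference))
    # Clipped overlap: a word counts only as often as it appears in both texts.
    overlap = sum((cand_counts & ref_counts).values())
    cand_total = sum(cand_counts.values())
    ref_total = sum(ref_counts.values())
    precision = overlap / cand_total if cand_total else 0.0
    recall = overlap / ref_total if ref_total else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical example: a generated response compared with a retrieved passage.
scores = rouge_1("the cat sat on the mat", "the cat lay on the mat")
print(scores)
```

In this toy example five of the six words in each string overlap, so precision, recall, and F1 all come out to 5/6. A production metric would typically add stemming and handle multiple retrieved documents.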

Efficient experimentation

DataRobot’s Playground allows customers and AI builders to experiment freely with different LLMs, chunking strategies, embedding methods, and prompting techniques. Evaluation metrics play a crucial role in helping users navigate this experimentation process efficiently. By providing a standardized set of evaluation metrics, DataRobot allows users to easily compare the performance of different LLM configurations and experiments. This lets customers and AI builders make data-driven decisions when choosing the best approach for their specific use case, saving time and resources in the process.

For example, by experimenting with different chunking strategies or embedding methods, users have been able to significantly improve the accuracy and relevance of their GenAI applications in real-world scenarios. This level of experimentation is crucial to building high-performance GenAI solutions tailored to specific industry requirements.
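As a rough illustration of comparing experiments on a standardized set of metrics, the sketch below filters hypothetical RAG configurations by latency and cost budgets and then ranks the rest by correctness. The `ExperimentResult` class, the `rank_experiments` function, the experiment names, and every number are placeholders invented for this example; they are not Playground output or a DataRobot API.

```python
from dataclasses import dataclass


@dataclass
class ExperimentResult:
    name: str
    correctness: float  # higher is better, on an assumed 0..1 scale
    latency_s: float    # seconds per response, lower is better
    cost_usd: float     # cost per response, lower is better


def rank_experiments(results, max_latency_s, max_cost_usd):
    """Drop experiments over budget, then sort by correctness (ties: cheaper, faster)."""
    eligible = [
        r for r in results
        if r.latency_s <= max_latency_s and r.cost_usd <= max_cost_usd
    ]
    return sorted(eligible, key=lambda r: (-r.correctness, r.cost_usd, r.latency_s))


# Placeholder values for three hypothetical configurations.
results = [
    ExperimentResult("small-chunks", correctness=0.82, latency_s=1.4, cost_usd=0.004),
    ExperimentResult("large-chunks", correctness=0.88, latency_s=2.9, cost_usd=0.009),
    ExperimentResult("large-chunks-big-model", correctness=0.91, latency_s=6.5, cost_usd=0.030),
]

ranked = rank_experiments(results, max_latency_s=3.0, max_cost_usd=0.01)
for r in ranked:
    print(r.name, r.correctness)
```

Treating latency and cost as hard constraints and correctness as the ranking key is one design choice among many; a weighted score across metrics would be an equally reasonable alternative.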

Optimization and user feedback

The Playground’s evaluation metrics act as a valuable tool for assessing the performance of GenAI applications. By analyzing metrics like Rouge-1 or citations, customers and AI builders can identify areas where their models can be improved, such as enhancing the relevance of generated responses or ensuring that the application leverages the most appropriate sources from the vector databases. These metrics provide a quantitative approach to assessing the quality of the generated responses.

In addition to evaluation metrics, DataRobot’s Playground allows users to provide direct feedback on generated responses through thumbs-up or thumbs-down ratings. This user feedback is the primary method for creating a fine-tuning dataset. Users can review the responses generated by the LLM and vote on their quality and relevance. The upvoted responses are then used to create a dataset for fine-tuning the GenAI application, allowing it to learn from user preferences and generate more accurate and relevant responses in the future. This means users can collect as much feedback as they need to create a comprehensive fine-tuning dataset that reflects real-world user preferences and requirements.
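The feedback loop described above can be sketched as follows. This is a minimal illustration that assumes feedback is available as simple records with a prompt, a response, and a thumbs-up flag; the `FeedbackRecord` class, the helper names, the example records, and the prompt/completion JSONL layout are all hypothetical and do not describe the Playground’s actual export format.

```python
import json
from dataclasses import dataclass


@dataclass
class FeedbackRecord:
    prompt: str
    response: str
    thumbs_up: bool  # True for a thumbs-up rating, False for thumbs-down


def build_tuning_dataset(records):
    """Keep only upvoted responses as prompt/completion pairs."""
    return [
        {"prompt": r.prompt, "completion": r.response}
        for r in records
        if r.thumbs_up
    ]


def to_jsonl(rows):
    """Serialize rows as JSON Lines, one example per line."""
    return "\n".join(json.dumps(row) for row in rows)


# Hypothetical feedback collected from reviewers.
feedback = [
    FeedbackRecord("What is the refund window?", "Refunds are accepted within 30 days.", True),
    FeedbackRecord("What is the refund window?", "I am not sure.", False),
    FeedbackRecord("Which plan includes support?", "The enterprise plan includes support.", True),
]

dataset = build_tuning_dataset(feedback)
print(to_jsonl(dataset))
```

Only the two upvoted responses end up in the dataset. Downvoted responses are simply dropped here, although they could also be kept as negative examples, depending on the fine-tuning method.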

By combining evaluation metrics and user feedback, customers and AI builders can make data-driven decisions to optimize their GenAI applications. They can use the metrics to identify high-performing responses and include them in the fine-tuning dataset, ensuring that the model learns from the best examples. This iterative process of evaluation, feedback, and fine-tuning enables organizations to continuously improve their GenAI applications and deliver high-quality, user-centric experiences.

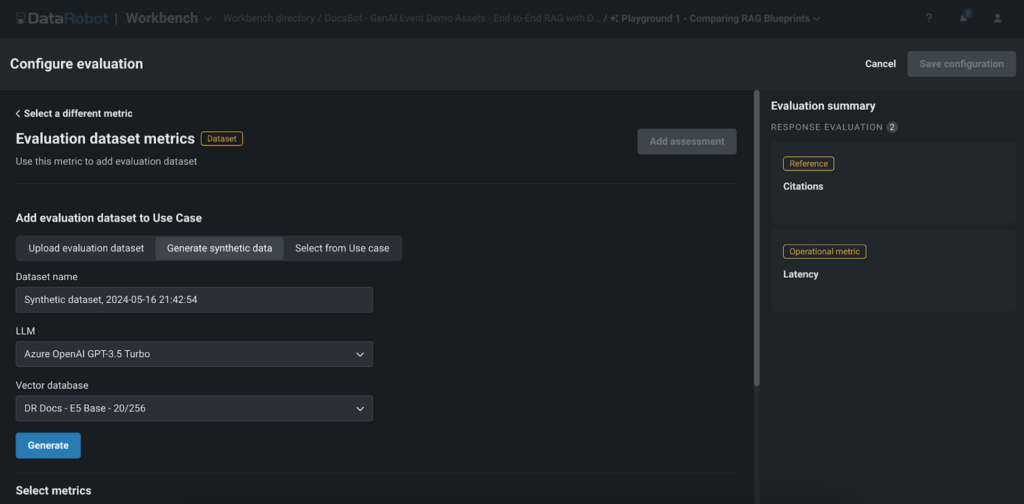

Synthetic data generation for rapid evaluation

One of the standout features of DataRobot’s Playground is synthetic data generation for question-and-answer evaluation. This feature allows users to quickly and effortlessly create question-answer pairs based on their own vector database, so they can thoroughly evaluate the performance of their RAG experiments without having to create data manually.

Synthetic data generation offers several key benefits:

- Time savings: Manually creating large datasets can be time-consuming. DataRobot’s synthetic data generation automates this process, saving valuable time and resources and enabling customers and AI builders to rapidly prototype and test their GenAI applications.

- Scalability: With the ability to generate thousands of question-answer pairs, users can thoroughly test their RAG experiments and ensure robustness across a wide range of scenarios. This comprehensive testing approach helps customers and AI builders deliver high-quality applications that meet the needs and expectations of their end users.

- Quality assessment: By comparing the generated responses with the synthetic data, users can easily evaluate the quality and accuracy of their GenAI application. This accelerates time to value, enabling organizations to bring their innovative solutions to market sooner and gain a competitive advantage in their respective industries.

It is important to note that while synthetic data provides a quick and efficient way to evaluate GenAI applications, it may not always capture the full complexity and nuance of real-world data. It is therefore crucial to use synthetic data together with real user feedback and other evaluation methods to ensure the robustness and effectiveness of the GenAI application.
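The quality-assessment step in this section, comparing generated responses against synthetic question-answer pairs, can be sketched roughly as below. The example assumes the pairs are already available as `(question, expected_answer)` tuples and stands in for the RAG application with a canned lookup function. The scoring rule (token recall against the expected answer), the 0.8 threshold, and all names and sample data are assumptions made for illustration rather than the Playground’s method.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def token_recall(generated: str, expected: str) -> float:
    """Fraction of expected-answer tokens that appear in the generated answer."""
    expected_tokens = tokens(expected)
    if not expected_tokens:
        return 0.0
    return len(expected_tokens & tokens(generated)) / len(expected_tokens)


def evaluate(qa_pairs, answer_fn, threshold=0.8):
    """Score each pair and return the overall pass rate plus per-question results."""
    results = []
    for question, expected in qa_pairs:
        score = token_recall(answer_fn(question), expected)
        results.append({"question": question, "score": score, "passed": score >= threshold})
    pass_rate = sum(r["passed"] for r in results) / len(results) if results else 0.0
    return pass_rate, results


# Placeholder synthetic pairs and a canned stand-in for the RAG application.
qa_pairs = [
    ("What is the refund window?", "30 days"),
    ("Which plan includes support?", "the enterprise plan"),
]
canned_answers = {
    "What is the refund window?": "Refunds are accepted within 30 days.",
    "Which plan includes support?": "Support is part of the premium plan.",
}

pass_rate, results = evaluate(qa_pairs, lambda q: canned_answers.get(q, ""))
print(pass_rate, results)
```

With these placeholder answers the first question passes and the second fails, giving a pass rate of 0.5. Token recall is a deliberately simple stand-in; a correctness metric judged against ground truth, as described earlier in the post, would be more robust to paraphrasing.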

Conclusion

DataRobot’s advanced LLM evaluation, testing, and assessment metrics in the Playground give customers and AI builders a powerful set of tools for creating efficient, reliable, and high-quality GenAI applications. By offering comprehensive evaluation metrics, efficient experimentation and optimization capabilities, user feedback integration, and synthetic data generation for rapid evaluation, DataRobot enables users to unlock the full potential of LLMs and drive meaningful outcomes.

With greater confidence in model performance, accelerated time to value, and the ability to fine-tune their applications, customers and AI builders can focus on delivering innovative solutions that solve real-world problems and create value for their end users. DataRobot’s Playground, with its advanced evaluation metrics and unique features, is a game-changer in the GenAI landscape, enabling organizations to push the boundaries of what’s possible with large language models.

Don’t miss the opportunity to optimize your projects with the most advanced LLM testing and evaluation platform available. Visit DataRobot’s Playground now and start your journey toward building superior GenAI applications that truly stand out in the competitive AI landscape.

About the author

Nathaniel Daly is a Senior Product Manager at DataRobot focusing on AutoML and time series products. His goal is to bring advances in data science to users so they can leverage this value to solve real-world business problems. He holds a bachelor’s degree in Mathematics from the University of California, Berkeley.