As you may have heard, DeepSeek-R1 is making waves. It's all over the AI news feed, hailed as the first open-source reasoning model of its kind.

The buzz? Well deserved.

The model? Powerful.

DeepSeek-R1 represents the current frontier in reasoning models, being the first open-source version of its kind. But here's the part you won't see in the headlines: working with it isn't exactly straightforward.

Prototyping can be clunky. Deploying to production? Even trickier.

That's where DataRobot comes in. We make it easier to develop with and deploy DeepSeek-R1, so you can spend less time wrestling with complexity and more time building real, enterprise-ready solutions.

Prototyping DeepSeek-R1 and bringing applications into production are critical to harnessing its full potential and delivering higher-quality generative AI experiences.

So what makes DeepSeek-R1 so compelling, and why is it getting all this attention? Let's take a closer look at whether the hype is justified.

Could this be the model that outperforms OpenAI's latest and greatest?

Beyond the hype: Why DeepSeek-R1 is worth your attention

DeepSeek-R1 isn't just another generative AI model. It's arguably the first open-source "reasoning" model: a generative text model specifically reinforced to generate text that approximates its reasoning and decision-making processes.

For AI practitioners, that opens up new possibilities for applications that require structured, logic-driven outputs.

What also stands out is its efficiency. Reportedly, training DeepSeek-R1 cost a fraction of what it took to develop models like GPT-4o, thanks to reinforcement learning techniques published by DeepSeek AI. And because it's open source, it offers greater flexibility while allowing you to maintain control over your data.

Of course, working with an open-source model like DeepSeek-R1 comes with its own set of challenges, from integration hurdles to performance variability. But understanding its potential is the first step toward making it work effectively in real-world applications and delivering more relevant and meaningful experiences to end users.

Using DeepSeek-R1 in DataRobot

Of course, potential doesn't always equal easy. That's where DataRobot comes in.

With DataRobot, you can host DeepSeek-R1 using NVIDIA GPUs for high-performance inference, or access it through serverless predictions for fast, flexible prototyping, experimentation, and deployment.

No matter where DeepSeek-R1 is hosted, you can integrate it seamlessly into your workflows.

In practice, this means you can:

- Compare performance across models without the hassle, using built-in benchmarking tools to see how DeepSeek-R1 stacks up against others.

- Deploy DeepSeek-R1 in production with confidence, backed by enterprise-grade security, observability, and governance features.

- Build AI applications that deliver relevant, reliable results, without getting bogged down by infrastructure complexity.

LLMs like DeepSeek-R1 are rarely used in isolation. In real-world production applications, they function as part of sophisticated workflows rather than as standalone models. With this in mind, we evaluated DeepSeek-R1 within multiple retrieval-augmented generation (RAG) pipelines over the well-known FinanceBench dataset and compared its performance to GPT-4o mini.

So how does DeepSeek-R1 stack up in real-world workflows? Here's what we found:

- Response time: Latency was notably lower for GPT-4o mini. The 80th percentile response time for the fastest pipelines was 5 seconds for GPT-4o mini and 21 seconds for DeepSeek-R1.

- Accuracy: The best generative AI pipeline using DeepSeek-R1 as the synthesizer LLM achieved 47% accuracy, outperforming the best pipeline using GPT-4o mini (43% accuracy).

- Cost: While DeepSeek-R1 delivered higher accuracy, its cost per call was significantly higher: roughly $1.73 per request compared to $0.03 for GPT-4o mini. Hosting choices significantly affect these costs.

While DeepSeek-R1 demonstrates impressive accuracy, its higher costs and slower response times may make GPT-4o mini the more efficient choice for many applications, especially when cost and latency are critical.
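To make the trade-off concrete, here is a minimal sketch that turns the three reported figures into ratios. Only the accuracy, cost, and 80th percentile latency numbers come from the evaluation above; the helper names and the "cost per correct answer" metric are illustrative assumptions, not part of the original analysis.

```python
# Figures reported in the comparison above; everything derived from them
# below is simple arithmetic, not an additional measurement.
REPORTED = {
    "DeepSeek-R1": {"accuracy": 0.47, "cost_per_request": 1.73, "p80_latency_s": 21.0},
    "GPT-4o mini": {"accuracy": 0.43, "cost_per_request": 0.03, "p80_latency_s": 5.0},
}


def cost_per_correct_answer(accuracy, cost_per_request):
    """Expected spend per correct answer (hypothetical metric: cost / accuracy)."""
    return cost_per_request / accuracy


def compare(model_a, model_b, figures=REPORTED):
    """Return how model_a relates to model_b on accuracy, cost, and latency."""
    a, b = figures[model_a], figures[model_b]
    return {
        "accuracy_gain_points": round((a["accuracy"] - b["accuracy"]) * 100, 1),
        "cost_ratio": a["cost_per_request"] / b["cost_per_request"],
        "latency_ratio": a["p80_latency_s"] / b["p80_latency_s"],
        "cost_per_correct_ratio": (
            cost_per_correct_answer(a["accuracy"], a["cost_per_request"])
            / cost_per_correct_answer(b["accuracy"], b["cost_per_request"])
        ),
    }


if __name__ == "__main__":
    for name, value in compare("DeepSeek-R1", "GPT-4o mini").items():
        print(f"{name}: {value:.1f}")
```

Read this way, a four-point accuracy gain is bought with a per-request cost and latency that are each several times higher, which is why the right choice depends on how much each extra correct answer is worth in a given application.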

This analysis highlights the importance of evaluating models not just in isolation but also within end-to-end workflows.

Raw performance metrics alone don't tell the full story. Evaluating models within sophisticated agentic and non-agentic RAG pipelines gives a clearer picture of their real-world viability.

Using DeepSeek-R1's reasoning in agents

DeepSeek-R1's strength isn't just in generating answers; it's in how it reasons through complex scenarios. This makes it particularly valuable for agent-based systems that need to handle dynamic, multi-layered use cases.

For enterprises, this reasoning capability goes beyond simply answering questions. It can:

- Present a range of options instead of a single "best" response, helping users explore different outcomes.

- Proactively gather information ahead of user interactions, enabling more responsive, context-aware experiences.

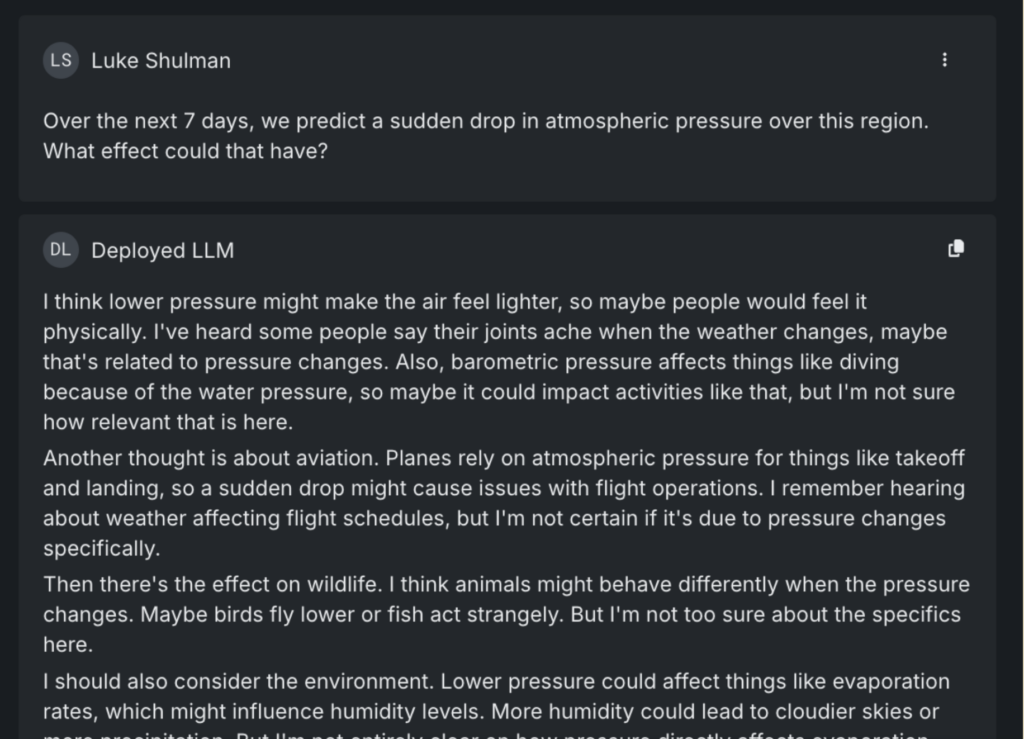

Here's an example:

When asked about the effects of a sudden drop in atmospheric pressure, DeepSeek-R1 doesn't just deliver a textbook answer. It identifies multiple ways the question could be interpreted, considering impacts on wildlife, aviation, and population health. It even notes less obvious consequences, such as the potential for outdoor event cancellations due to storms.

In an agent-based system, this kind of reasoning could be applied to real-world scenarios, such as proactively checking for flight delays or upcoming events that might be disrupted by weather changes.

Interestingly, when the same question was posed to other leading LLMs, including Gemini and GPT-4o, none flagged event cancellations as a potential risk.

DeepSeek-R1 stands out in agent-driven applications for its ability to anticipate, not just react.

Compare DeepSeek-R1 to GPT-4o-mini: What the data tells us

Too often, AI practitioners rely solely on an LLM's responses to determine whether it's ready for deployment. If the answers sound convincing, it's easy to assume the model is production-ready. But without deeper evaluation, that confidence can be misleading, since models that perform well in testing often struggle in real-world applications.

That's why combining expert review with quantitative assessments is key. It's not just about what the model says, but how it gets there, and whether that reasoning holds up under scrutiny.

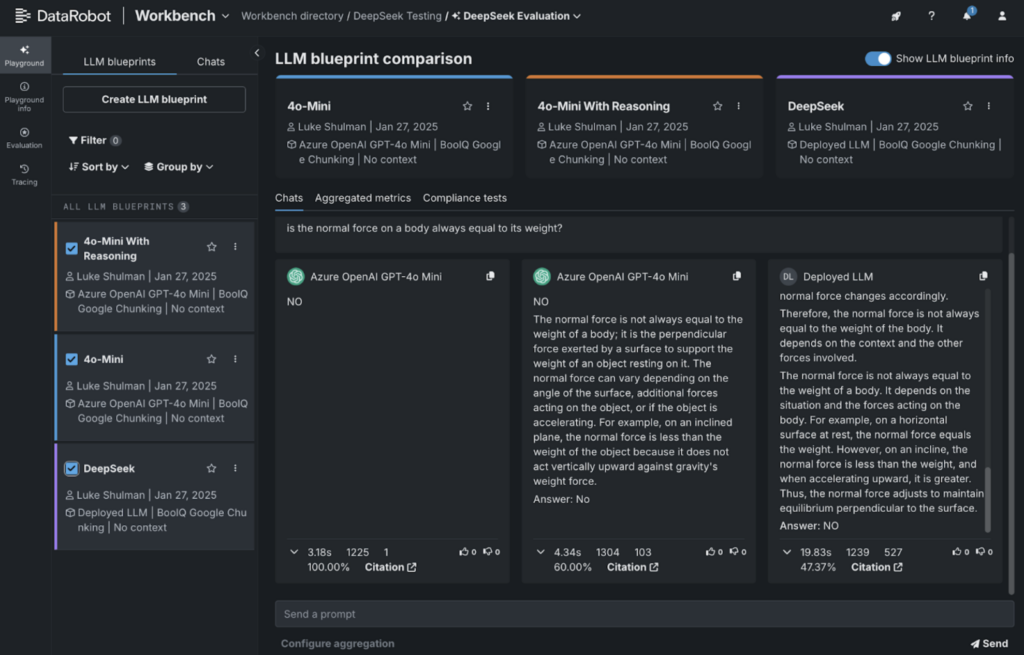

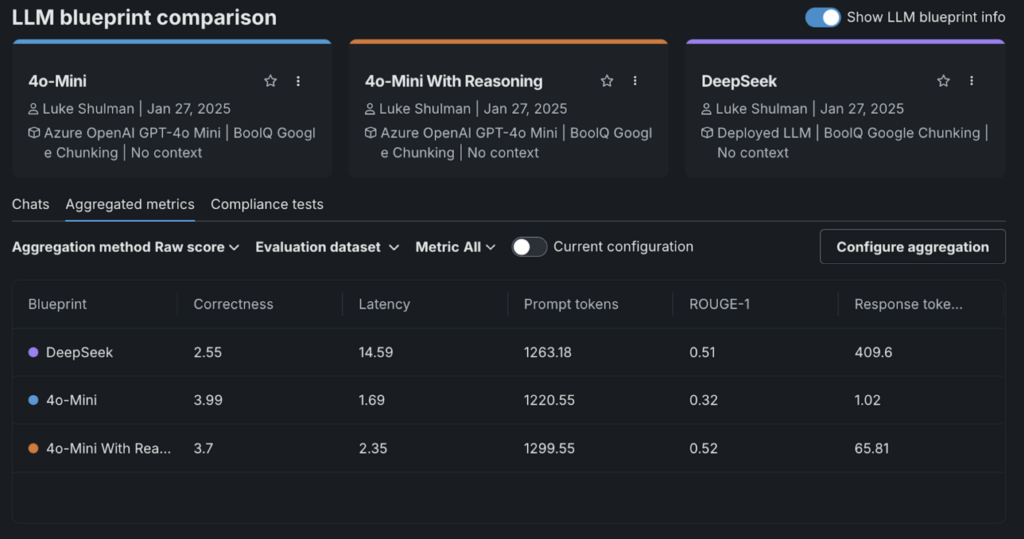

To illustrate this, we ran a quick evaluation using the Google BoolQ reading comprehension dataset. This dataset presents short passages followed by yes/no questions to test a model's comprehension.

For GPT-4o-mini, we used the following system prompt:

Try to answer with a clear YES or NO. You may also say TRUE or FALSE, but be clear in your response.

In addition to your answer, include your reasoning behind this answer. Enclose this reasoning with the tag

For example, if the user asks "What color is a can of Coca-Cola," you would say:

Answer: Red

Here's what we found:

- Right: DeepSeek-R1's output.

- Far left: GPT-4o-mini answering with a simple yes/no.

- Center: GPT-4o-mini with reasoning included.

We used DataRobot's integration with the LlamaIndex correctness evaluator to grade the responses. Interestingly, DeepSeek-R1 scored the lowest in this evaluation.

What stood out was how adding "reasoning" caused correctness scores to drop across the board.

This highlights an important takeaway: while DeepSeek-R1 performs well on some benchmarks, it may not always be the best fit for every use case. That's why it's critical to compare models side by side to find the right tool for the job.
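The scores above come from the LlamaIndex correctness evaluator. As a simpler sanity check that can run alongside it, the sketch below grades yes/no responses by exact agreement with BoolQ's boolean labels. This is a swapped-in technique, not the evaluator used in the comparison, and the function names are hypothetical. It assumes reasoning may be wrapped in `<think>...</think>` tags, as DeepSeek-R1 emits them, and that a final answer may follow an `Answer:` marker as in the system prompt example.

```python
import re

YES_WORDS = {"yes", "true"}
NO_WORDS = {"no", "false"}


def parse_yes_no(response):
    """Map a free-text response to True, False, or None if no verdict is found."""
    # Drop any <think>...</think> reasoning so words inside it are not
    # mistaken for the final verdict.
    text = re.sub(r"<think>.*?</think>", " ", response, flags=re.DOTALL).lower()
    # Prefer whatever follows the last "answer:" marker when one is present.
    if "answer:" in text:
        text = text.rsplit("answer:", 1)[1]
    for token in re.findall(r"[a-z]+", text):
        if token in YES_WORDS:
            return True
        if token in NO_WORDS:
            return False
    return None


def exact_match_accuracy(responses, labels):
    """Fraction of responses whose parsed verdict equals the boolean label."""
    if not labels or len(responses) != len(labels):
        raise ValueError("responses and labels must be non-empty and equal length")
    correct = sum(parse_yes_no(r) == label for r, label in zip(responses, labels))
    return correct / len(labels)


if __name__ == "__main__":
    # Made-up responses for illustration only; not results from the evaluation.
    sample_responses = [
        "<think>There is no mention of a ban in the passage.</think>Answer: Yes",
        "FALSE. The passage states the opposite.",
        "It is hard to tell from the passage.",
    ]
    sample_labels = [True, False, True]
    print(exact_match_accuracy(sample_responses, sample_labels))
```

Exact match only checks the final verdict, so it will not penalize flawed reasoning the way a correctness evaluator can. Comparing the two scores is one way to see whether a drop comes from wrong answers or from how the reasoning is judged.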

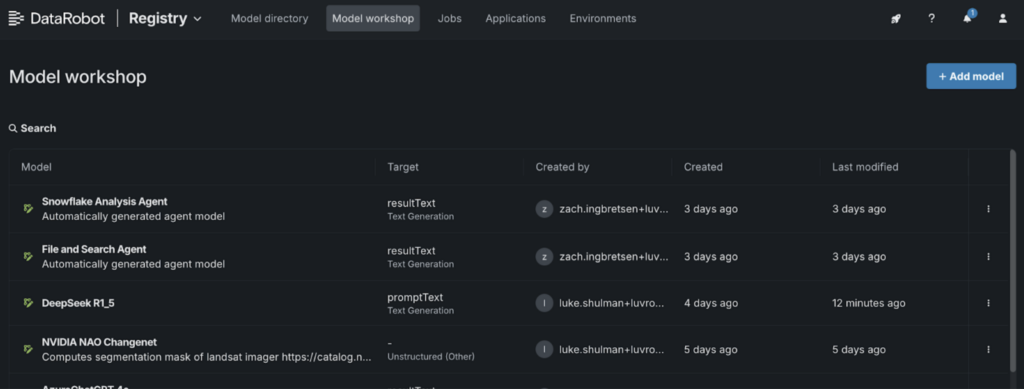

Hosting DeepSeek-R1 in DataRobot: A step-by-step guide

Getting DeepSeek-R1 up and running doesn't have to be complicated. Whether you're working with one of the base models (over 600 billion parameters) or a distilled version fine-tuned on smaller models such as Llama-70B or Llama-8B, the process is straightforward. You can host any of these variants in DataRobot with just a few setup steps.

1. Go to the Model Workshop:

- Navigate to the "Registry" and select the "Model Workshop" tab.

2. Add a new model:

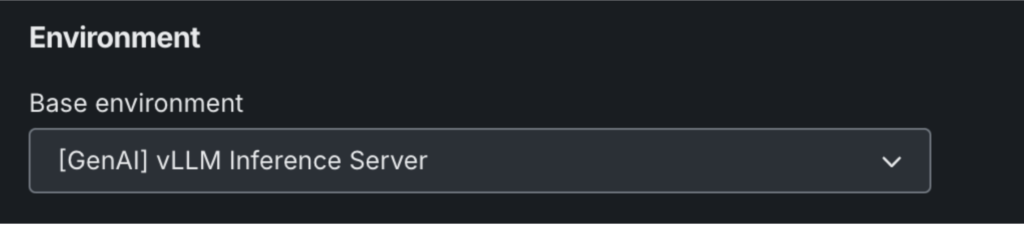

- Name your model and choose "[GenAI] vLLM Inference Server" under the environment settings.

- Click "+ Add Model" to open the Custom Model Workshop.

3. Set up your model metadata:

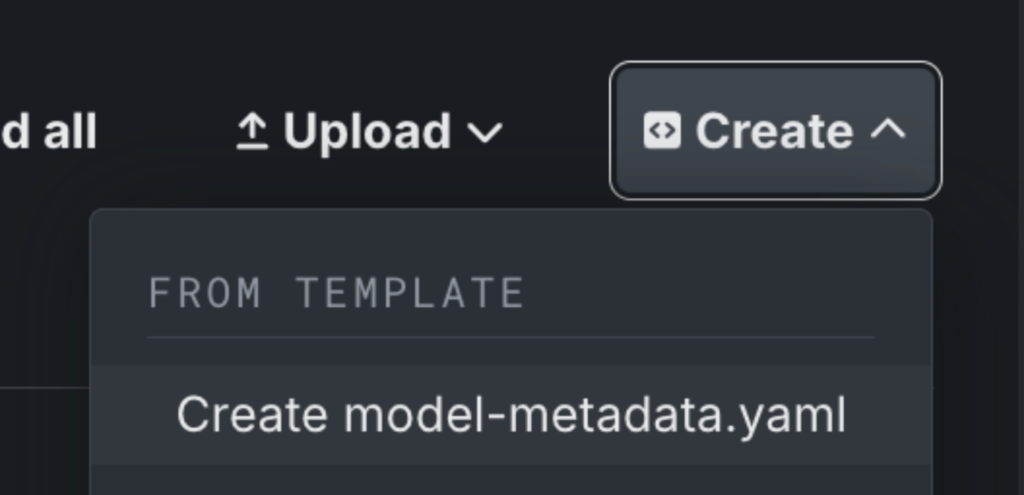

- Click "Create" to add a model-metadata.yaml file.

4. Edit the metadata file:

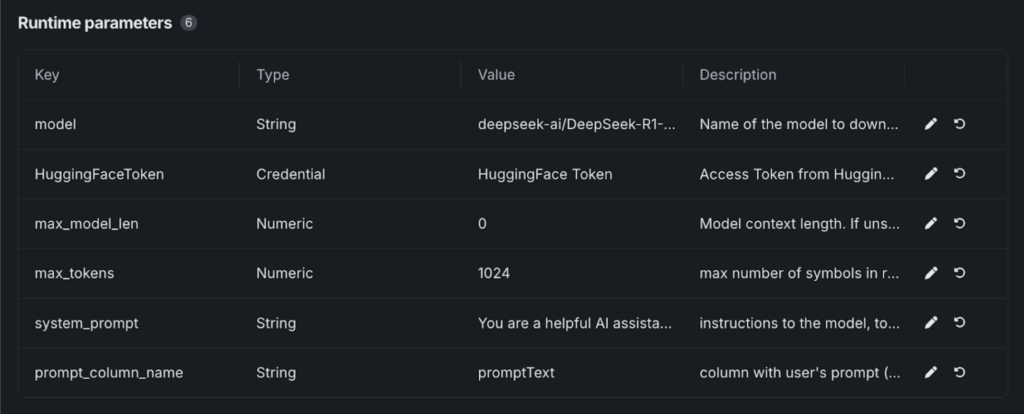

- Save the file, and "Runtime Parameters" will appear.

- Paste the required values from our GitHub template, which includes all the parameters needed to launch the Hugging Face model.

5. Configure the model details:

- Select your Hugging Face token from the DataRobot Credential Store.

- Under "model", enter the variant you're using. For example: deepseek-ai/DeepSeek-R1-Distill-Llama-8B.

6. Launch and deploy:

- Once saved, your DeepSeek-R1 model will be running.

- From here, you can test the model, deploy it to an endpoint, or integrate it into playgrounds and applications.
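Once the model is deployed, client code typically needs two things: a request body and a way to separate the model's reasoning from its final answer. The sketch below assumes the deployment accepts an OpenAI-style chat completion payload (vLLM serves an OpenAI-compatible API) and that the model wraps its reasoning in `<think>` tags, as DeepSeek-R1 models do. The function names are hypothetical, and the endpoint URL and authentication headers are deliberately left out because they depend on your deployment.

```python
import json


def build_chat_request(model, question, max_tokens=1024):
    """Build an OpenAI-style chat completion payload (assumed request shape)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "max_tokens": max_tokens,
    }


def split_reasoning(text):
    """Split model output into (reasoning, answer) around the closing </think> tag.

    Handles output that omits the opening <think> tag; if no closing tag is
    found, the whole text is treated as the answer.
    """
    head, sep, tail = text.partition("</think>")
    if not sep:
        return "", text.strip()
    reasoning = head.replace("<think>", "", 1).strip()
    return reasoning, tail.strip()


if __name__ == "__main__":
    payload = build_chat_request(
        "deepseek-ai/DeepSeek-R1-Distill-Llama-8B",
        "What are the effects of a sudden drop in atmospheric pressure?",
    )
    # Send this JSON to your deployment's chat endpoint with your own client.
    print(json.dumps(payload, indent=2))

    # Illustrative output only; not a real model response.
    reasoning, answer = split_reasoning(
        "<think>Pressure drops often precede storms.</think>\nExpect possible storms."
    )
    print("reasoning:", reasoning)
    print("answer:", answer)
```

Keeping the reasoning separate lets an application log or display it for review while passing only the final answer to downstream steps.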

From DeepSeek-R1 to enterprise-ready AI

Accessing cutting-edge generative AI tools is just the start. The real challenge is evaluating which models fit your specific use case and bringing them safely to production to deliver real value to your end users.

DeepSeek-R1 is just one example of what's achievable when you have the flexibility to work across models, compare their performance, and deploy them with confidence.

The same tools and processes that simplify working with DeepSeek can help you get the most out of other models and power AI applications that deliver real impact.

See how DeepSeek-R1 compares to other AI models and deploy it in production with a free trial.

About the author

Nathaniel Daly is a Senior Product Manager at DataRobot, focusing on AutoML and time series. He is focused on bringing advances in data science to users so that they can leverage this value to solve real-world business problems. He holds a degree in Mathematics from the University of California, Berkeley.