Learn how the Azure Hardware Systems and Interconnect team uses Azure NetApp Files for chip development.

High-performance computing (HPC) workloads place significant demands on cloud infrastructure, requiring robust and scalable resources to handle complex and intensive computational tasks. These workloads often require high levels of parallel processing power, typically provided by clusters of virtual machines based on central processing units (CPUs) or graphics processing units (GPUs). In addition, HPC applications demand substantial data storage and fast access speeds that exceed the capabilities of traditional cloud file systems. Specialized storage solutions are required to meet their low-latency, high-throughput input/output (I/O) needs.

Microsoft Azure NetApp Files is designed to deliver low latency, high performance, and enterprise-grade data management at scale. Its unique capabilities make it suitable for several high-performance computing workloads, such as Electronic Design Automation (EDA), seismic processing, reservoir simulations, and risk modeling. This blog highlights the differentiated capabilities of Azure NetApp Files for EDA workloads and Microsoft's silicon design journey.

Infrastructure requirements of EDA workloads

EDA workloads have intensive computational and data processing requirements to handle complex simulation, physical design, and verification tasks. Each design stage involves multiple simulations to improve accuracy, increase reliability, and detect design defects early, which reduces debugging and redesign costs. Silicon development engineers can use additional simulations to test different design scenarios and optimize the chip's power, performance, and area (PPA).

EDA workloads are classified into two main types, frontend and backend, each with different requirements for the underlying storage and compute infrastructure. Frontend workloads focus on the logic design and functional aspects of chip design. They consist of thousands of short-duration parallel jobs with an I/O pattern characterized by frequent random reads and writes across millions of small files. Backend workloads focus on translating the logic design into a physical design for manufacturing. They consist of hundreds of jobs that involve sequential reads and writes of fewer, larger files.

Choosing a storage solution that meets this unique mix of frontend and backend workload patterns is not trivial. The SPEC consortium has established the SPEC SFS benchmark to help compare the various storage solutions in the industry. For EDA workloads, the EDA_BLENDED benchmark provides the characteristic patterns of frontend and backend workloads. The composition of I/O operations is described in the following table.

| EDA workload stage | I/O operation types |
| --- | --- |
| Frontend | Stat (39%), Access (15%), Read File (7%), Random Read (8%), Write File (10%), Random Write (15%), Other Ops (6%) |
| Backend | Read (50%), Write (50%) |
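
To make the operation mix in the table above more concrete, the following minimal Python sketch draws a synthetic stream of operation names with the same proportions. It is only an illustration of the percentages, not the SPEC SFS tooling itself; the names `FRONTEND_MIX`, `BACKEND_MIX`, `sample_ops`, and `observed_share` are hypothetical and chosen for this example.

```python
import random
from collections import Counter

# Frontend operation mix in percent, copied from the table above.
FRONTEND_MIX = {
    "stat": 39,
    "access": 15,
    "read_file": 7,
    "random_read": 8,
    "write_file": 10,
    "random_write": 15,
    "other": 6,
}

# Backend operation mix in percent, copied from the table above.
BACKEND_MIX = {"read": 50, "write": 50}


def sample_ops(mix, count, seed=0):
    """Draw `count` operation names with probability proportional to `mix`."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    names = list(mix)
    weights = [mix[name] for name in names]
    return rng.choices(names, weights=weights, k=count)


def observed_share(ops):
    """Return the observed percentage of each operation name in `ops`."""
    total = len(ops)
    return {name: 100.0 * n / total for name, n in Counter(ops).items()}


if __name__ == "__main__":
    ops = sample_ops(FRONTEND_MIX, 100_000)
    shares = observed_share(ops)
    for name in sorted(shares, key=shares.get, reverse=True):
        print(f"{name:>12}: {shares[name]:5.1f}%")
```

Running the script prints observed shares that should sit close to the table's percentages. The frontend mix is dominated by metadata operations (Stat and Access together account for more than half), which is why small-file and metadata performance matters so much for this stage.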

Azure NetApp Files supports regular volumes, which are ideal for workloads such as databases and general-purpose file systems. EDA workloads operate on large volumes of data and require very high throughput, which would require multiple regular volumes. The introduction of large volumes to support larger quantities of data is advantageous for EDA workloads, because it simplifies data management and delivers higher performance than multiple regular volumes.

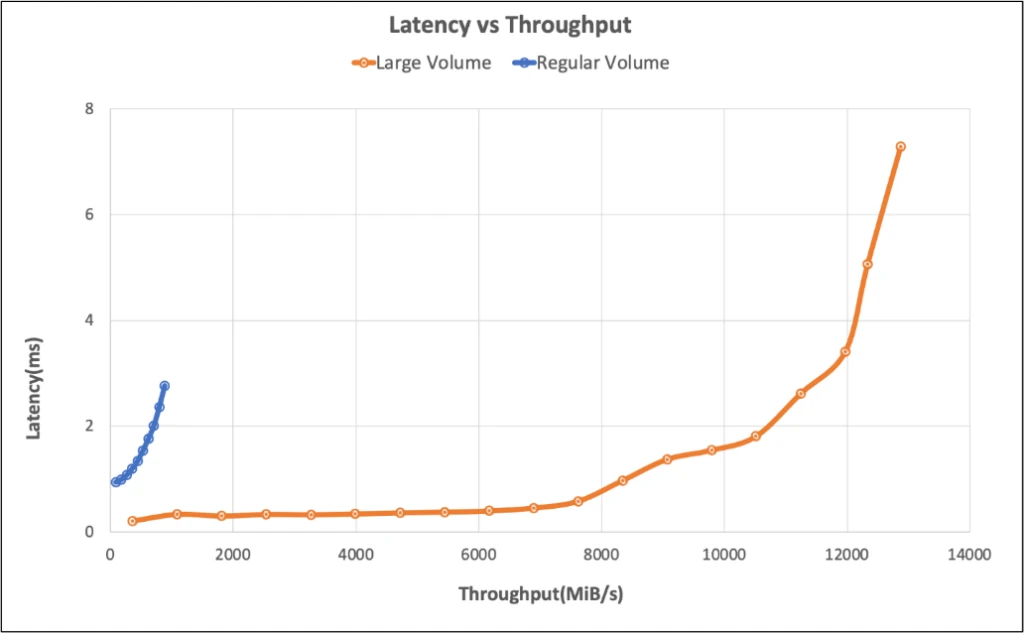

Below is the output of the SPEC SFS EDA_BLENDED benchmark performance test, which demonstrates that Azure NetApp Files can deliver ~10 GiB/s of throughput with less than 2 ms of latency using large volumes.

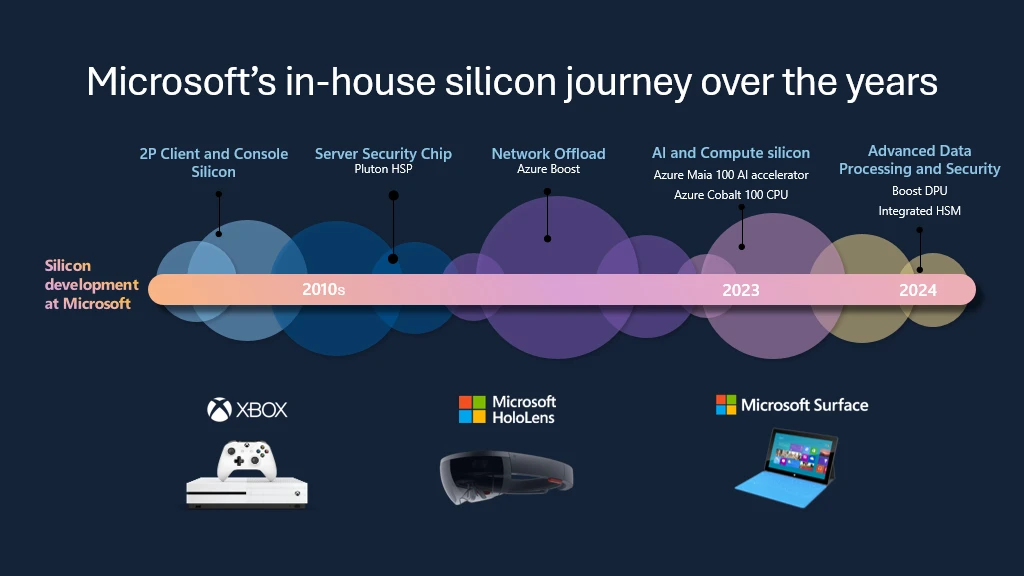

Electronic Design Automation at Microsoft

Microsoft is committed to enabling AI in every workload and experience for the devices of today and tomorrow. It starts with the design and manufacture of silicon. Microsoft is pushing scientific boundaries at an unprecedented pace to run EDA workflows, stretching the limits of Moore's Law by adopting Azure for our own chip design needs.

Using a best-practices model to optimize Azure for chip design across customers, partners, and suppliers has been crucial to the development of some of Microsoft's first fully custom cloud silicon chips:

- The Azure Maia 100 AI Accelerator, optimized for AI tasks and generative AI.

- The Azure Cobalt 100 CPU, an Arm-based processor tailored to run general-purpose compute workloads on Microsoft Azure.

- The Azure Integrated Hardware Security Module, Microsoft's newest in-house security chip, designed to harden key management.

- The Azure Boost DPU, the company's first in-house data processing unit, designed for data-centric workloads with high efficiency and low power.

The chips developed by the Azure cloud hardware team are deployed in Azure servers that deliver better compute capabilities for HPC workloads and further accelerate the pace of innovation, reliability, and operational efficiency used to develop Azure's production systems. By adopting Azure for EDA, the Azure cloud hardware team enjoys these benefits:

- Rapid access to scalable, cutting-edge processors on demand.

- Dynamic pairing of each EDA tool with a specific CPU architecture.

- Use of Microsoft's innovations in AI-driven technologies for semiconductor workflows.

How Azure NetApp Files accelerates innovation in semiconductor development

- Superior performance: Azure NetApp Files can deliver up to 652,260 IOPS with less than 2 milliseconds of latency, and reaches 826,000 IOPS at the performance edge (~7 milliseconds of latency).

- High scalability: As EDA projects advance, the data they generate can grow exponentially. Azure NetApp Files provides large-capacity, high-performance single namespaces with large volumes of up to 2 PiB, scaling seamlessly to support compute clusters of up to 50,000 cores.

- Operational simplicity: Azure NetApp Files is designed for simplicity, with a convenient user experience through the Azure portal or through the automation API.

- Cost efficiency: Azure NetApp Files offers cool access, which transparently moves cool data blocks to a managed Azure storage tier at reduced cost and automatically returns them to the hot tier on access. In addition, Azure NetApp Files reserved capacity provides significant cost savings compared to pay-as-you-go pricing, further reducing the high costs associated with enterprise-grade storage solutions.

- Security and reliability: Azure NetApp Files provides enterprise-grade data management, control plane, and data plane security features, ensuring that critical EDA data is protected and available, with key management and encryption for data at rest and data in transit.
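
One way to read the IOPS and latency figures in the list above is through Little's Law, which relates the average number of requests in flight to the request rate and the time each request spends in the system. The sketch below is a back-of-the-envelope illustration only: it treats the quoted latencies as exact averages, which is an assumption, and the function name `outstanding_ios` is hypothetical.

```python
def outstanding_ios(iops, latency_ms):
    """Little's Law: average in-flight requests = request rate x time in system."""
    return iops * (latency_ms / 1000.0)


# Figures quoted in the list above. "Less than 2 ms" is treated as exactly
# 2 ms here, so the first result is an upper bound (assumption of this sketch).
QUOTED_POINTS = {
    "under 2 ms": (652_260, 2.0),
    "performance edge (~7 ms)": (826_000, 7.0),
}

if __name__ == "__main__":
    for label, (iops, latency_ms) in QUOTED_POINTS.items():
        in_flight = outstanding_ios(iops, latency_ms)
        print(f"{label}: about {in_flight:,.0f} requests in flight")
```

With these inputs the estimate comes to roughly 1,300 requests in flight at the 2 ms point and about 5,800 at the performance edge. In other words, the extra IOPS at the edge are obtained by keeping several times more requests outstanding, at the cost of higher per-request latency.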

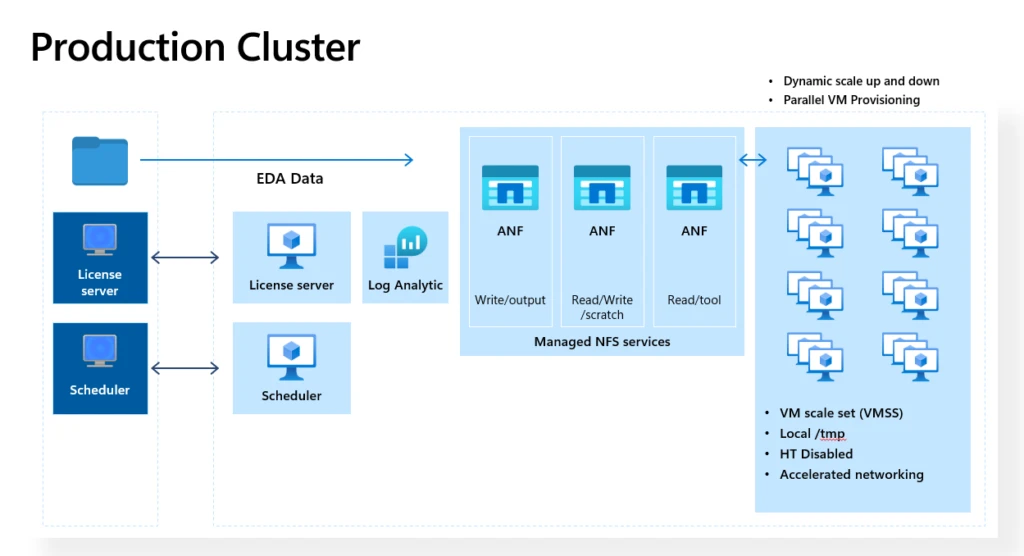

The accompanying diagram shows a production EDA cluster deployed in Azure by the Azure cloud hardware team, where Azure NetApp Files serves clients with more than 50,000 cores per cluster.

"Azure NetApp Files provides the scalable performance and reliability that we need to facilitate seamless integration with Azure for a diverse set of Electronic Design Automation tools used in silicon development."

Mike Lemus, Director, Silicon Development Compute Solutions at Microsoft

"In today's fast-paced world of semiconductor design, Azure NetApp Files offers agility, performance, security, and stability: the keys to delivering silicon innovation for our Azure cloud."

Silvian Goldenberg, Partner and General Manager for Design Methodology and Silicon Infrastructure at Microsoft

Learn more about Azure NetApp Files

Azure NetApp Files has proven to be the storage solution of choice for the most demanding EDA workloads. By providing low latency, high throughput, and scalable performance, Azure NetApp Files supports the dynamic and complex nature of EDA tasks, ensuring rapid access to cutting-edge processors and seamless integration with the Azure HPC solution stack.

See the Azure Well-Architected Framework perspective on Azure NetApp Files for detailed information and guidance.

For more information about Azure NetApp Files, see the Azure NetApp Files documentation.