Introduction

Selecting the right GPU is a critical decision when running machine learning and LLM workloads. You need enough compute to run your models efficiently without overspending on power you don't need. In this post, we compare two solid options: NVIDIA's A10 and the newer L40S GPUs. We'll break down their specs, their performance on LLM benchmarks, and their pricing to help you choose based on your workload.

There is also a growing challenge in the industry. Nearly 40% of companies struggle to run AI projects because of limited access to GPUs. Demand is outpacing supply, which makes it hard to scale reliably. This is where flexibility becomes important. Relying on a single cloud or hardware provider can slow your projects down. We'll explore how Clarifai's Compute Orchestration helps you access both A10 and L40S GPUs, giving you the freedom to switch based on availability and workload needs while avoiding vendor lock-in.

Let's dive in and take a look at these two different GPU architectures.

Ampere GPU (NVIDIA A10)

NVIDIA's Ampere architecture, launched in 2020, introduced third-generation Tensor Cores optimized for mixed-precision compute (FP16, TF32, INT8) and improved Multi-Instance GPU (MIG) support. The A10 GPU is designed for cost-effective AI inference, computer vision, and graphics-heavy workloads. It efficiently handles mid-sized LLMs, vision models, and video tasks. With second-generation RT Cores and RTX Virtual Workstation (vWS) support, the A10 is a solid choice for running graphics and AI workloads on virtualized infrastructure.

Ada Lovelace GPU (NVIDIA L40S)

The Ada Lovelace architecture takes performance and efficiency further and is designed for modern AI and graphics workloads. The L40S GPU features fourth-generation Tensor Cores with FP8 precision support, which delivers significant acceleration for large LLMs, generative AI, and fine-tuning. It also adds third-generation RT Cores and AV1 hardware encoding, which makes it a strong fit for complex 3D graphics, rendering, and media pipelines. The Ada Lovelace architecture lets the L40S handle multi-workload environments where AI compute and high-end graphics run side by side.

A10 vs. L40S: Specs comparison

Core count and clock speeds

The L40S has a higher CUDA core count than the A10, providing greater parallel processing power for AI and ML workloads. CUDA cores are specialized GPU cores designed to handle complex parallel computations, which is essential for accelerating AI tasks.

The L40S also runs at a higher boost clock of 2520 MHz, a 49% increase over the A10's 1695 MHz, resulting in faster compute throughput.

VRAM capacity and memory bandwidth

The L40S offers 48 GB of VRAM, twice the A10's 24 GB, which lets it handle larger models and datasets more efficiently. Its memory bandwidth is also higher, at 864.0 GB/s compared to the A10's 600.2 GB/s, improving data throughput during memory-intensive tasks.
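
The ratios quoted in this section can be checked with a few lines of arithmetic. The sketch below uses only the figures given above; the dictionary layout and the `percent_increase` helper are illustrative names, not part of any vendor tool.

```python
# Spec figures quoted in this section
# (boost clock in MHz, VRAM in GB, memory bandwidth in GB/s).
SPECS = {
    "A10": {"boost_mhz": 1695, "vram_gb": 24, "bandwidth_gbs": 600.2},
    "L40S": {"boost_mhz": 2520, "vram_gb": 48, "bandwidth_gbs": 864.0},
}


def percent_increase(new: float, old: float) -> float:
    """Return how much larger `new` is than `old`, as a percentage."""
    return (new - old) / old * 100.0


for field in ("boost_mhz", "vram_gb", "bandwidth_gbs"):
    gain = percent_increase(SPECS["L40S"][field], SPECS["A10"][field])
    print(f"{field}: L40S is {gain:.0f}% higher than A10")
```

On these numbers the boost clock gap is about 49%, VRAM is doubled, and memory bandwidth is roughly 44% higher.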

A10 vs. L40S: Performance

How do the A10 and L40S compare in real-world inference? Our research team benchmarked the MiniCPM-4B, Phi-4-mini-instruct, and Llama-3.2-3b-Instruct models running in FP16 (half precision) on both GPUs. FP16 enables faster performance and lower memory usage, which makes it well suited to large-scale workloads.

We measured latency (the time taken to generate each token and to complete a full request, in seconds) and throughput (the number of tokens processed per second) across several scenarios. Both metrics are essential for evaluating LLM performance in production.
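
To see why FP16 keeps memory use low, it helps to estimate the weight footprint: each FP16 parameter takes 2 bytes. The sketch below is a rough estimate under stated assumptions. The parameter counts are nominal values inferred from the model names, and the figure covers weights only, not the KV cache, activations, or framework overhead.

```python
BYTES_PER_PARAM_FP16 = 2  # FP16 stores each parameter in 16 bits


def fp16_weight_gb(num_params: float) -> float:
    """Approximate memory for model weights alone in FP16, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM_FP16 / 1e9


# Nominal parameter counts inferred from the model names (assumption).
models = {"~4B-parameter model": 4e9, "~3B-parameter model": 3e9}
# VRAM capacities quoted in the specs comparison.
vram_gb = {"A10": 24, "L40S": 48}

for name, params in models.items():
    weights = fp16_weight_gb(params)
    for gpu, capacity in vram_gb.items():
        headroom = capacity - weights
        print(f"{name}: {weights:.0f} GB of weights, {headroom:.0f} GB left on {gpu}")
```

Models of this size fit on either GPU in FP16; the extra VRAM on the L40S mainly buys headroom for larger batches and longer contexts.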
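
As a rough illustration of how these two metrics can be derived from raw timings, here is a minimal sketch. The `RequestTiming` structure and the sample values are hypothetical, and the exact definitions used in our benchmark harness may differ (for example, in how time to first token is handled).

```python
from dataclasses import dataclass


@dataclass
class RequestTiming:
    start_s: float      # when the request was sent
    end_s: float        # when the last token arrived
    output_tokens: int  # tokens generated for this request


def end_to_end_latency(t: RequestTiming) -> float:
    """Seconds from sending the request to receiving the final token."""
    return t.end_s - t.start_s


def latency_per_token(t: RequestTiming) -> float:
    """Average seconds spent per generated token for one request."""
    return end_to_end_latency(t) / t.output_tokens


def throughput(timings: list[RequestTiming]) -> float:
    """Tokens per second across all requests, over the wall-clock window."""
    window = max(t.end_s for t in timings) - min(t.start_s for t in timings)
    return sum(t.output_tokens for t in timings) / window


# Two made-up concurrent requests, each producing 150 output tokens in 3 seconds.
timings = [RequestTiming(0.0, 3.0, 150), RequestTiming(0.0, 3.0, 150)]
print(latency_per_token(timings[0]), throughput(timings))
```

Note that with concurrent requests, throughput can rise even while per-token latency gets worse, which is why both numbers are reported.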

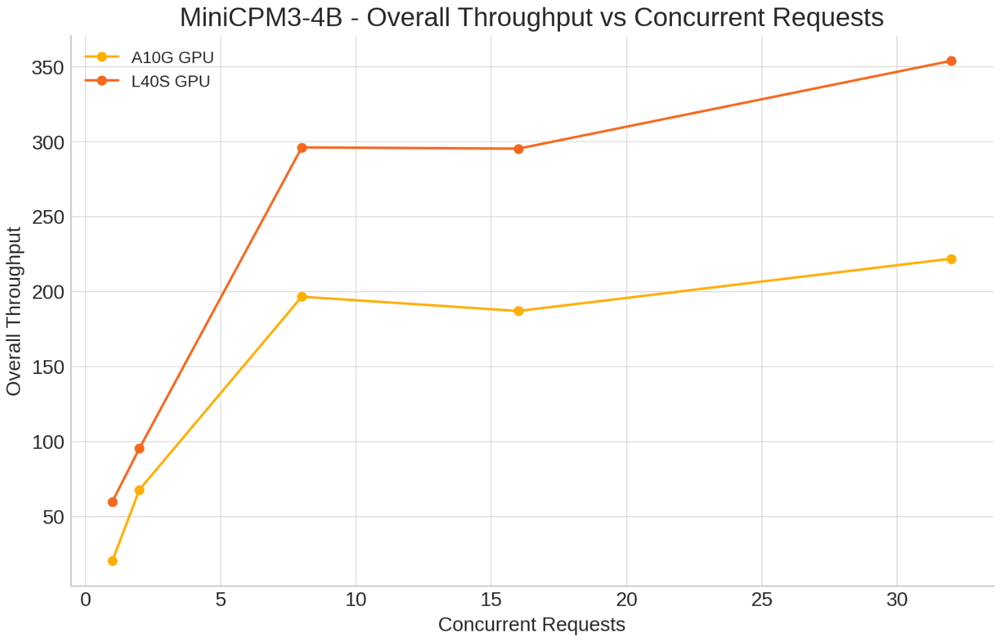

MiniCPM-4B

Tested scenarios:

- Concurrent requests: 1, 2, 8, 16, 32

- Input tokens: 500

- Output tokens: 150

Key insights:

- Single concurrent request: The L40S significantly improved per-token latency (0.016s compared to 0.047s on the A10G) and delivered higher end-to-end throughput, rising from 97.21 to 296.46 tokens/sec.

- Higher concurrency (32 concurrent requests): The L40S maintained better latency (0.067s vs. 0.088s) and a throughput of 331.96 tokens/sec, while the A10G reached 258.22 tokens/sec.

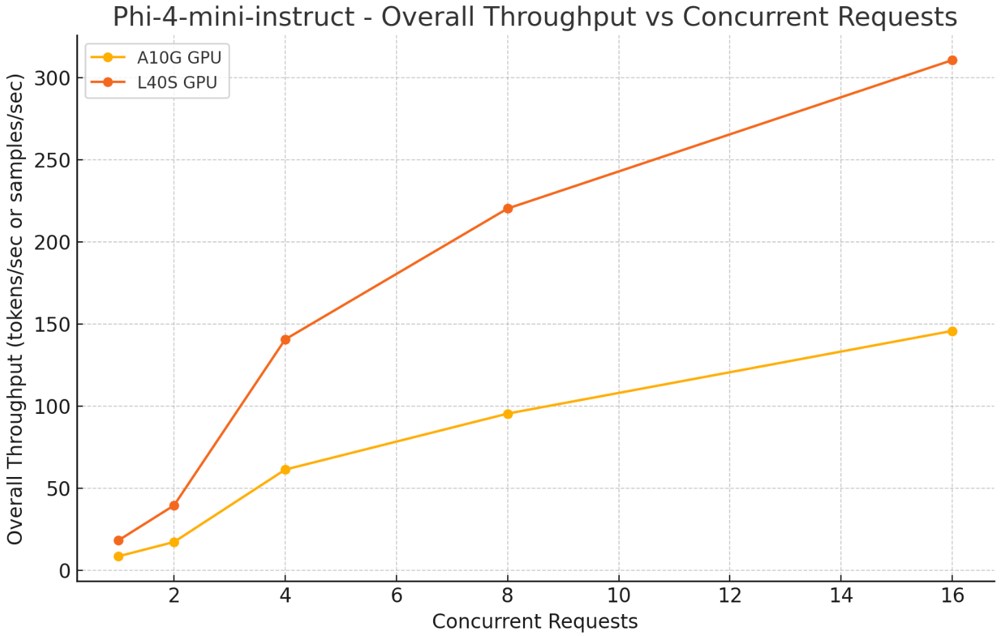

Phi-4-mini-instruct

Tested scenarios:

- Concurrent requests: 1, 2, 8, 16, 32

- Input tokens: 500

- Output tokens: 150

Key insights:

- Single concurrent request: The L40S reduced per-token latency from 0.02s (A10) to 0.013s and improved overall throughput from 56.16 to 85.18 tokens/sec.

- Higher concurrency (32 concurrent requests): The L40S reached 590.83 tokens/sec at 0.03s latency per token, surpassing the A10's 353.69 tokens/sec.

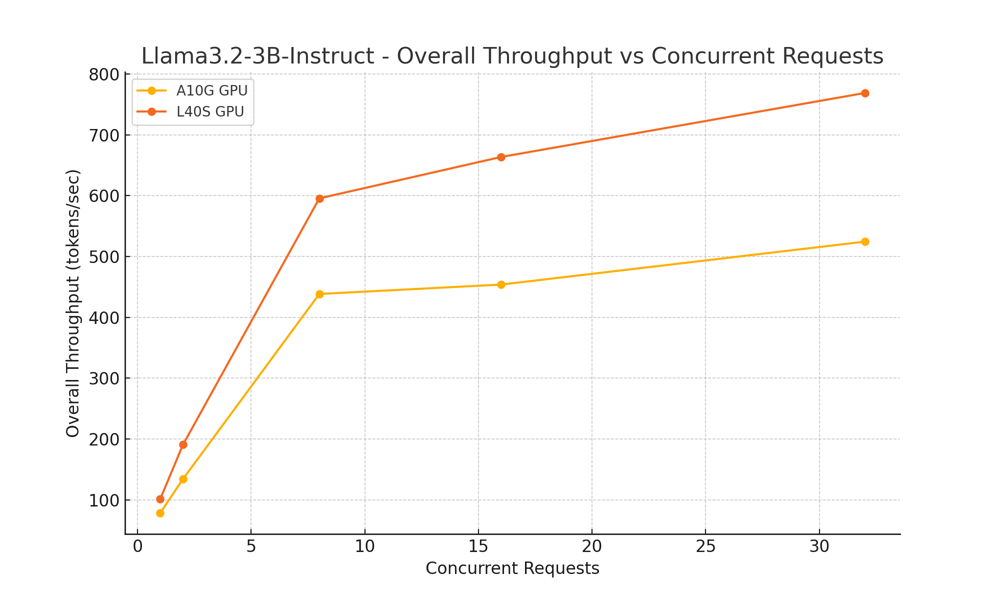

Llama-3.2-3b-Instruct

Tested scenarios:

- Concurrent requests: 1, 2, 8, 16, 32

- Input tokens: 500

- Output tokens: 150

Key insights:

- Single concurrent request: The L40S improved per-token latency from 0.015s (A10) to 0.012s, with throughput increasing from 76.92 to 95.34 tokens/sec.

- Higher concurrency (32 concurrent requests): The L40S delivered 609.58 tokens/sec of throughput, surpassing the A10's 476.63 tokens/sec, and reduced per-token latency from 0.039s (A10) to 0.027s.

Across all tested models, the NVIDIA L40S GPU consistently outperformed the A10 in both latency and throughput.

While the L40S demonstrates strong performance improvements, it is equally important to consider factors such as cost and resources. Upgrading to the L40S may require a higher upfront investment, so teams should carefully evaluate the trade-off based on their specific use cases, scale, and budget.

Now, let's take a closer look at how the A10 and L40S compare in terms of pricing.
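
To put the results side by side, the throughput figures reported above can be turned into speedup ratios. This is a small sketch over the numbers in this section only; the table layout and the `speedup` helper are illustrative.

```python
# (model, concurrent requests): (A10 tokens/sec, L40S tokens/sec), as reported above.
# The MiniCPM-4B baseline is the one labeled A10G in the text.
THROUGHPUT = {
    ("MiniCPM-4B", 1): (97.21, 296.46),
    ("MiniCPM-4B", 32): (258.22, 331.96),
    ("Phi-4-mini-instruct", 1): (56.16, 85.18),
    ("Phi-4-mini-instruct", 32): (353.69, 590.83),
    ("Llama-3.2-3b-Instruct", 1): (76.92, 95.34),
    ("Llama-3.2-3b-Instruct", 32): (476.63, 609.58),
}


def speedup(a10: float, l40s: float) -> float:
    """How many times faster the L40S is than the A10 on the same scenario."""
    return l40s / a10


speedups = {key: speedup(*pair) for key, pair in THROUGHPUT.items()}
for (model, concurrency), ratio in speedups.items():
    print(f"{model} @ {concurrency} concurrent: {ratio:.2f}x")
```

On these figures the L40S advantage ranges from roughly 1.2x to 3x, depending on the model and concurrency level.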

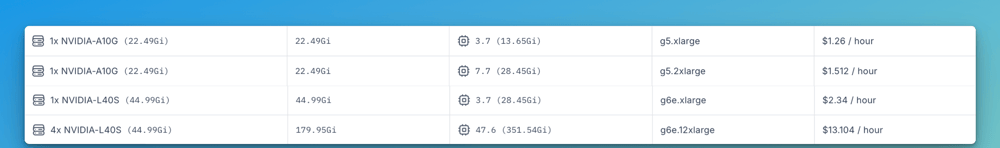

A10 vs. L40S: Pricing

While the L40S is more powerful than the A10, it is also significantly more expensive to run. Based on Clarifai's Compute Orchestration pricing, the L40S instance (g6e.xlarge) costs $2.34 per hour, nearly twice the cost of the A10-equipped instance (g5.xlarge) at $1.26 per hour.

There are two variants available for both the A10 and L40S:

- A10 comes in g5.xlarge ($1.26/hour) and g5.2xlarge ($1.512/hour) configurations.

- L40S comes in g6e.xlarge ($2.34/hour) and g6e.12xlarge ($13.104/hour) for larger workloads.
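
Hourly price alone does not tell you what a workload costs, so it can help to combine it with throughput into a cost per million tokens. The sketch below does that under two assumptions that are not stated in the benchmarks: that the measured throughput applies to the g5.xlarge and g6e.xlarge instances, and that the GPU stays fully utilized for the whole hour.

```python
# Hourly prices for g5.xlarge (A10) and g6e.xlarge (L40S), from this section.
HOURLY_PRICE_USD = {"A10": 1.26, "L40S": 2.34}

# Phi-4-mini-instruct throughput at 32 concurrent requests, from the benchmarks.
TOKENS_PER_SEC = {"A10": 353.69, "L40S": 590.83}


def cost_per_million_tokens(price_per_hour: float, tokens_per_sec: float) -> float:
    """USD per one million generated tokens at sustained throughput."""
    tokens_per_hour = tokens_per_sec * 3600
    return price_per_hour / tokens_per_hour * 1_000_000


for gpu, price in HOURLY_PRICE_USD.items():
    cost = cost_per_million_tokens(price, TOKENS_PER_SEC[gpu])
    print(f"{gpu}: ${cost:.2f} per million tokens")
```

Swap in the throughput you measure for your own model and concurrency level; the ranking can change from one workload to another.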

Choosing the right GPU

Choosing between the NVIDIA A10 and L40S depends on your workload demands and budget considerations:

- NVIDIA A10 is well suited to companies looking for a cost-effective GPU capable of handling mixed workloads, including AI inference, machine learning, and professional visualization. Its lower power consumption and solid performance make it a practical choice for mainstream applications where extreme compute power is not required.

- NVIDIA L40S is designed for organizations running compute-intensive workloads, such as generative AI and LLM inference. With significantly higher performance and memory bandwidth, the L40S offers the scalability needed for demanding AI and graphics tasks, which makes it a strong investment for production environments that require top-tier GPU power.

Scale workloads with flexibility and reliability

We have seen the differences between the A10 and L40S and how choosing the right GPU depends on your specific use case and performance needs. But the next question is: how do you access these GPUs for your AI workloads?

One of the growing challenges in AI and machine learning development is navigating the global GPU shortage while avoiding dependence on a single cloud provider. High-demand GPUs such as the L40S, with their higher performance, are not always available when you need them. On the other hand, although the A10 is more accessible and cost-effective, availability can still vary depending on the region or cloud provider.

This is where Clarifai's Compute Orchestration comes in. It provides flexible, on-demand access to both A10 and L40S GPUs across multiple cloud providers and private infrastructure without locking you into a single vendor. You can choose the provider and cloud region where you want to deploy, such as AWS, GCP, Azure, Vultr, or Oracle, and run your AI workloads on dedicated GPU clusters within those environments.

Whether your workload needs the efficiency of the A10 or the power of the L40S, Clarifai routes your jobs to the resources you select while optimizing for availability, performance, and cost. This approach helps you avoid delays caused by GPU shortages or price spikes and gives you the flexibility to scale your AI projects with confidence without being tied to a single provider.

Conclusion

Choosing the right GPU comes down to understanding your workload requirements and performance goals. The NVIDIA A10 offers a cost-effective option for mixed AI and graphics workloads, while the L40S provides the power and scalability needed for demanding tasks such as generative AI and large language models. By matching your GPU choice to your specific use case, you can achieve the right balance of performance, efficiency, and cost.

Clarifai's Compute Orchestration makes it easy to access both A10 and L40S GPUs across multiple cloud providers, giving you the flexibility to scale without being limited by availability or vendor lock-in.

For a breakdown of GPU costs and to compare pricing across different deployment options, visit the Clarifai pricing page. You can also join our Discord channel at any time to connect with experts, get answers to your questions about choosing the right GPU for your workloads, or get help optimizing your AI infrastructure.