Many firms have heterogeneous knowledge platforms and expertise stacks throughout completely different enterprise models or knowledge domains. For many years, they’ve been scuffling with the dimensions, pace, and correctness wanted to derive well timed, significant, and actionable insights from huge and various massive knowledge environments. Regardless of various architectural patterns and paradigms, they nonetheless find yourself with perpetual “knowledge puddles” and silos throughout many non-interoperable knowledge codecs. Fixed knowledge duplication, advanced extract, remodel, load (ETL) processes, and sprawling infrastructure result in prohibitively costly options, negatively impacting time to worth, time to market, general complete price of possession (TCO) and return on funding (ROI). ) for enterprise.

Cloudera’s open knowledge lake, powered by Apache Iceberg, solves the real-world massive knowledge challenges talked about above by offering a unified, curated, shareable, and interoperable knowledge lake that may be accessed by a variety of instruments and engines. Iceberg suitable pc methods.

The Apache Iceberg REST catalog takes this accessibility to the following degree, simplifying the alternate and consumption of Iceberg desk knowledge between producers and shoppers of heterogeneous knowledge by way of an open-standard RESTful API specification.

REST Catalog Worth Proposition

- Offers open, metastore-agnostic APIs for Iceberg metadata operations, dramatically simplifying Iceberg shopper and metastore/engine integration.

- Summarizes the implementation particulars of the backend metastore for Iceberg shoppers.

- Offers entry to real-time metadata by way of direct integration with the Iceberg-compatible metastore.

- Apache Iceberg, along with the REST catalog, dramatically simplifies enterprise knowledge structure, lowering time to worth, time to market, and general TCO, and driving higher return on funding.

The cloud open knowledge lake homepowered by Apache Iceberg and the REST catalog, now gives the power to share knowledge with non-Cloudera engines securely.

With Cloudera’s open knowledge lake, you’ll be able to enhance the productiveness of knowledge professionals and launch new knowledge and AI purposes a lot quicker with the next key options:

- multi-engine interoperability and compatibility with Apache Iceberg, together with Cloudera DataFlow (NiFi), Cloudera Stream Analytics (Flink, SQL Stream Builder), Cloudera Knowledge Engineering (Spark), Cloudera Knowledge Warehouse (Impala, Hive), and Cloudera AI (previously Cloudera Machine Studying).

- Time journey: Replay a question beginning at a cut-off date or a snapshot ID, which can be utilized for historic audits, validation of machine studying fashions, and rollback of failed operations, as examples.

- Revert desk– Permits customers to rapidly repair issues by rolling again tables to state.

- Intensive set of SQL instructions (question, DDL, DML): Create or manipulate database objects, run queries, load and modify knowledge, carry out time journey operations, and convert exterior Hive tables to Iceberg tables. utilizing SQL instructions.

- Native desk evolution (schema, partition): Evolve your Iceberg desk schema and partition structure on the fly while not having to rewrite knowledge, migrate, or make utility modifications.

- Cloudera Shared Knowledge Expertise (SDX) Integration: Present unified safety, governance, and metadata administration, in addition to knowledge lineage and auditing of all of your knowledge.

- Iceberg Replication: Out-of-the-box desk backup and catastrophe restoration capabilities.

- Straightforward workload portability between the general public cloud and the personal cloud with out the necessity to refactor the code.

Resolution Overview

Knowledge sharing is the power to share knowledge managed in Cloudera, particularly Iceberg tables, with exterior customers (shoppers) who’re exterior of the Cloudera surroundings. You’ll be able to share Iceberg desk knowledge along with your shoppers, who can then entry the info utilizing third-party engines comparable to Amazonian AthenaTrino, Databricks or Snowflake that help the Iceberg REST catalog.

The answer mentioned on this weblog describes how Cloudera shares knowledge with an Amazon Athena laptop computer. Cloudera makes use of a Hive Metastore REST Catalog Service (HMS) carried out in response to the Iceberg REST Catalog API specification. This service might be made obtainable to your shoppers utilizing the OAuth authentication mechanism outlined by the

KNOX token administration system and utilizing Apache Ranger insurance policies to outline knowledge shares for shoppers. Amazon Athena will use the Iceberg REST Catalog open API to run queries on knowledge saved in Cloudera Iceberg tables.

Conditions

The next parts have to be put in and configured in Cloudera within the cloud:

The next AWS conditions:

- An AWS account and IAM function with permissions to create Athena notebooks

On this instance, you will see easy methods to use Amazon Athena to entry knowledge that’s created and up to date in Iceberg tables utilizing Cloudera.

Seek the advice of the consumer documentation for set up and configuration of Cloudera public cloud.

Observe the steps beneath to configure Cloudera:

1. Create database and tables:

Open HUE and run the next to create a database and tables.

CREATE DATABASE IF NOT EXISTS airlines_data; DROP TABLE IF EXISTS airlines_data.carriers; CREATE TABLE airlines_data.carriers ( carrier_code STRING, carrier_description STRING) STORED BY ICEBERG TBLPROPERTIES ('format-version'='2'); DROP TABLE IF EXISTS airlines_data.airports; CREATE TABLE airlines_data.airports ( airport_id INT, airport_name STRING, metropolis STRING, nation STRING, iata STRING) STORED BY ICEBERG TBLPROPERTIES ('format-version'='2');

2. Load knowledge into tables:

In HUE run the next to load knowledge into every Iceberg desk.

INSERT INTO airlines_data.carriers (carrier_code, carrier_description) VALUES ("UA", "United Air Traces Inc."), ("AA", "American Airways Inc.") ; INSERT INTO airlines_data.airports (airport_id, airport_name, metropolis, nation, iata) VALUES (1, 'Hartsfield-Jackson Atlanta Worldwide Airport', 'Atlanta', 'USA', 'ATL'), (2, 'Los Angeles Worldwide Airport', 'Los Angeles', 'USA', 'LAX'), (3, 'Heathrow Airport', 'London', 'UK', 'LHR'), (4, 'Tokyo Haneda Airport', 'Tokyo', 'Japan', 'HND'), (5, 'Shanghai Pudong Worldwide Airport', 'Shanghai', 'China', 'PVG') ;

3. Seek the advice of the Iceberg desk of carriers:

In HUE run the next question. You will note the two operator data within the desk.

SELECT * FROM airlines_data.carriers;

4. Configure the REST catalog

5. Configure the ranger coverage to permit “relaxation demo” entry to sharing:

Create a coverage that can permit the “rest-demo” operate to have learn entry to the Carriers desk, however won’t have entry to learn the Airports desk.

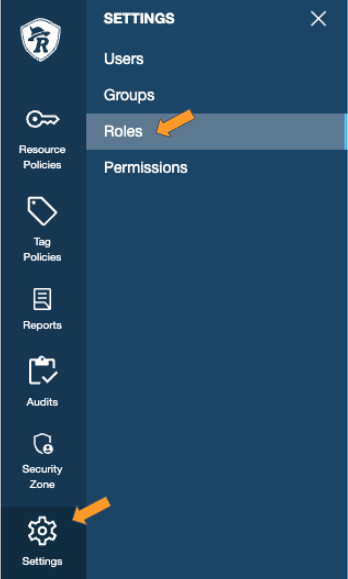

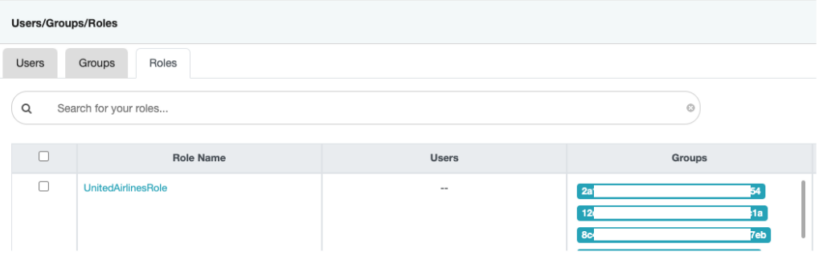

In Ranger, go to Settings > Roles to validate that your function is on the market and that you’ve got been assigned teams.

On this case, I’m utilizing a job referred to as “UnitedAirlinesRole” that I can use to share knowledge.

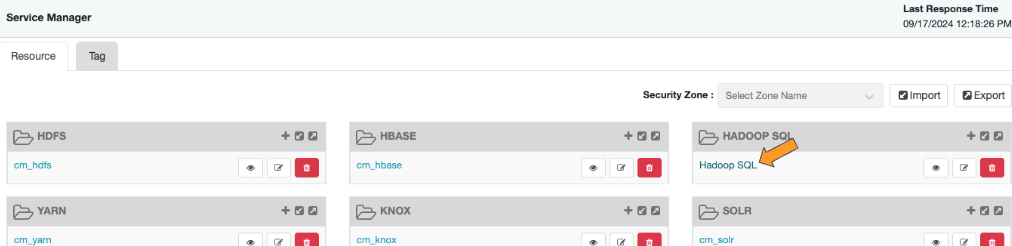

Add a coverage in Ranger > Hadoop SQL.

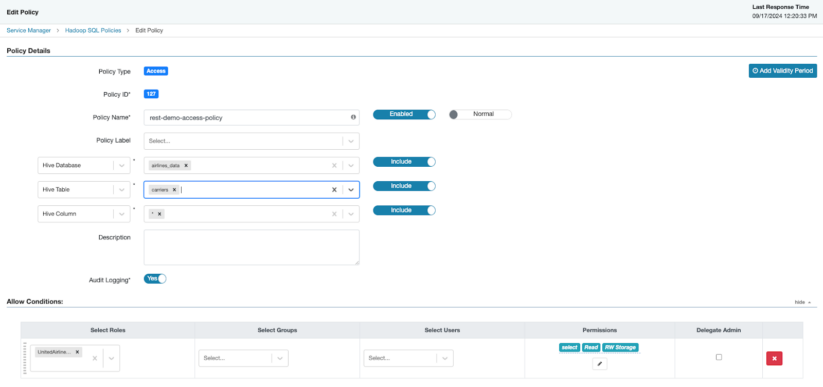

Create a brand new coverage with the next settings, ensure to avoid wasting your coverage

- Coverage identify: rest-demo-access-policy

- Hive Database: airline_data

- Beehive desk: carriers

- Beehive column: *

- Underneath permitted situations

- Choose your function in “Choose Roles”

- Permissions: choose

Observe the steps beneath to create an Amazon Athena laptop computer configured to make use of the Cloudera Iceberg REST catalog:

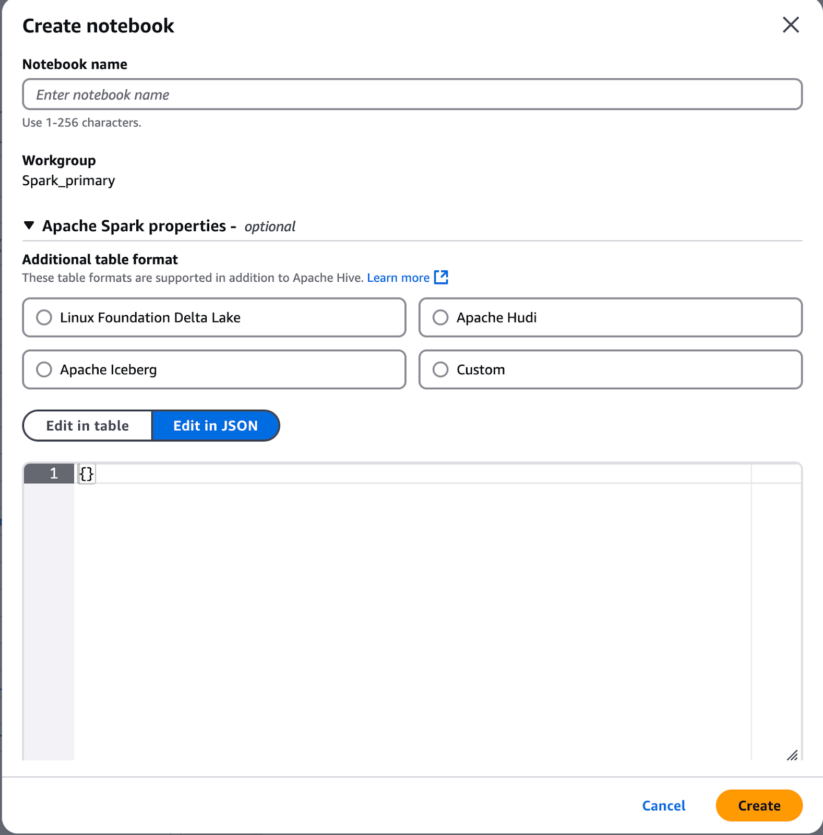

6. Create an Amazon Athena pocket book with the “Spark_primary” workgroup

to. Present a reputation to your pocket book

b. Further Apache Spark Properties: This can allow using the Cloudera Iceberg REST catalog. Choose the “Edit in JSON” button. Copy the next and change <cloudera-knox-gateway-node>, <cloudera-environment-name>, <Consumer ID>and <shopper secret> with the suitable values. See the REST catalog configuration weblog to find out which values to make use of for the alternative.

{ "spark.sql.catalog.demo": "org.apache.iceberg.spark.SparkCatalog", "spark.sql.catalog.demo.default-namespace": "airways", "spark.sql.catalog.demo.kind": "relaxation", "spark.sql.catalog.demo.uri": "https:/// /cdp-share-access/hms-api/icecli", "spark.sql.catalog.demo.credential": " : ", "spark.sql.defaultCatalog": "demo", "spark.sql.extensions": "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions" }

do. Click on the “Create” button to create a brand new pocket book

7. Spark-sql Pocket book: Run instructions by way of REST catalog

Run the next instructions separately to see what is on the market within the Cloudera REST catalog. It is possible for you to to:

- View the listing of accessible databases

spark.sql(present databases).present();

- Change to airline_data database

spark.sql(use airlines_data);

- View obtainable tables (you shouldn’t see the Airports desk within the returned listing)

spark.sql(present tables).present();

- Test the service desk to see the two carriers at the moment on this desk.

spark.sql(SELECT * FROM airlines_data.carriers).present()

Observe the steps beneath to make modifications to the Cloudera Iceberg desk and question the desk utilizing Amazon Athena:

8. Cloudera: Insert a brand new report into the Carriers desk:

In HUE run the next so as to add a row to the Carriers desk.

INSERT INTO airlines_data.carriers VALUES("DL", "Delta Air Traces Inc.");

9. Cloudera – Question Bearer Iceberg Desk:

In HUE and run the next so as to add a row to the Carriers desk.

SELECT * FROM airlines_data.carriers;

10. Amazon Athena Pocket book: See the subset of the Airways (Carriers) desk for modifications:

Run the next question; you must see 3 rows returned. This reveals that the REST catalog will mechanically deal with any modifications to the metadata pointer, guaranteeing that you just get the latest knowledge.

spark.sql(SELECT * FROM airlines_data.carriers).present()

11. Amazon Athena Pocket book: Strive querying the Airports desk to test if the safety coverage is in impact:

Run the next question. This question ought to fail, as anticipated, and won’t return any knowledge from the Airports desk. The rationale for that is that the Ranger Coverage is being enforced and entry to this desk is being denied.

spark.sql(SELECT * FROM airlines_data.airports).present()

Conclusion

On this put up, we discover easy methods to arrange a knowledge alternate between Cloudera and Amazon Athena. We use Amazon Athena to attach by way of the Iceberg REST catalog to question knowledge created and maintained in Cloudera.

Key options of Cloudera Open Knowledge Lake Home embody:

- Multi-engine help for a number of Cloudera merchandise and different Iceberg REST-compatible instruments.

- Time journey and desk rollback for knowledge restoration and historic evaluation.

- Complete SQL help and on-site schema evolution.

- Integration with Cloudera SDX for unified safety and governance.

- Iceberg replication for catastrophe restoration.

Amazon Athena is a serverless, interactive analytics service that gives a simplified and versatile option to analyze petabytes of knowledge the place it lives. Amazon Athena additionally makes it simple to run knowledge evaluation interactively utilizing Apache Spark with out having to plan, configure, or handle. sources. Whenever you run Apache Spark purposes in Athena, you ship Spark code for processing and obtain the outcomes straight. Use the simplified pocket book expertise within the Amazon Athena console to develop Apache Spark purposes utilizing Python or Use the Athena pocket book APIs. Iceberg’s REST catalog integration with Amazon Athena allows organizations to leverage the scalability and processing energy of EMR Spark for large-scale knowledge processing, analytics, and machine studying workloads on giant knowledge units saved in database tables. Cloudera Iceberg.

For firms going through challenges with their numerous knowledge platforms, which can have points associated to knowledge scale, pace, and accuracy, this answer can present important worth. This answer can cut back knowledge duplication points, simplify advanced ETL processes, and cut back prices whereas bettering enterprise outcomes.

To be taught extra about Cloudera and easy methods to get began, see Getting began. Confirm Cloudera Open Knowledge Lake Home for extra data on obtainable capabilities or go to Cloudera.com for particulars on all the pieces Cloudera has to supply. Check with Getting began with amazon athena